Exercise 1

1. Compare QA and QC with respect to focus and assessment measures/tools

| aspect | QC (repair defects) | QA (early detection) |
|---|---|---|
| focus | product quality at a given moment | project process (process oriented) |
| character | reactive (detects issues) | preventive (blocks issues) |
| starting point | requirement gathering | project planning |
| tools and measures | testing, test metrics and reports | quality metrics, reviews and audits |

2. Objectivity in process quality assurance evaluations is critical to the success of a project. List four objective evaluation methods.

- formal audit

- peer reviews

- desk audit

- distributed review

- poka-yoke

3. Mention four benefits of software quality assurance.

- SQA is a cost-effective investment

- increases customer trust

- improves the product's safety and reliability

- lowers maintenance costs

- guards against system failure

4. Explain what is meant by PDCA/Deming cycle.

a well-defined, repeating four-step cycle (Plan, Do, Check, Act) used for quality assurance and continuous improvement

5. An agile project using Scrum has many opportunities to objectively evaluate ceremonies and work products. Describe three opportunities.

- user stories are examined

- scrum master coaches the team

- feedback on what was built

- management or peers observe Scrum ceremonies

6. Process tailoring is a critical activity that allows controlled changes to the processes due to the specific needs of a project or a part of the organization. Mention three reasons for process tailoring.

- Accommodating the process to a new solution

- Adapting the process to a new work environment

- Modifying the process description, so that it can be used within a given project

- Adding more detail to the process to address a unique solution or constraint

- Modifying, replacing, or reordering process elements

7. List four examples of organizational process assets.

- templates

- plans

- best practices

- approved methods

- guidelines

8. Identify three similarities between QA and QC.

- both are critical components of a company's quality management strategy

- both improve a company's product manufacturing process

- both intend to cut expenses

9. Mention three quality assurance work products

- Criteria

- Checklists

- Evaluation reports

- Noncompliance reports

- Improvement proposals

10. replace with key term

- Problems identified when team members do not follow applicable standards, recorded processes, or procedures

- Quality assurance process to develop processes that reduce defects by avoiding or correcting mistakes

- Proven set of global best practices that drives business performance through benchmark key capabilities

- Ongoing process that ensures software product meets and complies with organization's established and standardized quality specifications

- Tangible resources used by an organization to guide the management of its projects and operations

- A process-level improvement training and appraisal program

- Proven set of global best practices that drives business performance through building and benchmarking key capabilities

- refers to how effectively a software product adheres to the core design specifications based on functional standards

- how effectively the project satisfies non-functional standards, including security, accessibility, scalability, and reliability, all of which contribute to the proper fulfillment of the predetermined requirements

- defines the structures necessary to contain the processes, process assets, and connections

- The physical structure or framework for organizing the content

- Reflects how the data is organized within the structural architecture

- A critical activity that allows controlled changes to the processes

- focuses on satisfying the requirements

- ensures that the product works as intended in its target environment

- noncompliance issues

- poka-yoke

- The Capability Maturity Model Integration (CMMI)

- Software quality assurance (SQA)

- Process Assets

- The Capability Maturity Model Integration (CMMI)

- The Capability Maturity Model Integration (CMMI)

- Software functional quality

- Software structural quality

- Process architecture

- Structure Process Architecture

- Content Process Architecture

- Process adaptation/tailoring

- Verification

- Validation

11. Define three areas of interest of CMMI

- Product and service development (CMMI-DEV)

- Service establishment and management (CMMI-SVC)

- Product and service acquisition (CMMI-ACQ)

Exercise 2

1. The aim of 5 whys root cause analysis is to find the main technical causes behind the problem

- it inspects a problem in depth until the real cause is revealed

- often, issues that are considered a technical problem actually turn out to be human and process problems.

- avoid answers that are too simple / overlook important details.

- it is used for defects reported from a customer site

2. When to perform causal analysis? Which factors affect decision of effort and formality required?

when:

- during the task, when problems or successes warrant a causal analysis

- a work product significantly deviates from its requirements

- more defects than anticipated

- process performance exceeds expectations

- a process does not meet its quality objectives

factors:

- stakeholders

- risks

- complexity

- frequency

- availability of data

3. Identify elements of process improvement proposal.

created for changes that have proven to be effective; it contains:

- areas that were analyzed, including their context

- solution selection

- actions taken

- results achieved

4. Discuss advantages of the PDCA approach.

- stimulates continuous improvement

- tests possible solutions

- prevents mistakes from recurring

5. Defining the problem is an important step in root cause analysis. Explain the rule that should be followed in defining the problem.

- define the problem

- collect data

- identify possible causal factors

- identify the root cause

- recommend and implement solutions

Defining the problem: use the SMART rule.

- S -> Specific

- M -> Measurable

- A -> Attainable

- R -> Relevant

- T -> Time bound

6. Describe four types of costs of quality.

cost of good quality:

- prevention cost: incurred to prevent poor quality in products

- appraisal cost: incurred to measure, inspect, and evaluate products to assure conformance to quality requirements

cost of poor quality:

- internal failure cost: incurred when a product fails to conform to quality specifications before shipment to the customer

- external failure cost: incurred when a product fails to conform to quality specifications after shipment to the customer
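The four cost categories above add up to the total cost of quality. A minimal sketch of that arithmetic, using invented figures (the dictionary keys, amounts, and helper names are illustrative assumptions, not data from a real project):

```python
# Hypothetical cost figures for one project, in arbitrary currency units.
costs = {
    "prevention": 20_000,        # e.g. training, quality planning
    "appraisal": 30_000,         # e.g. inspections, testing, audits
    "internal_failure": 25_000,  # e.g. rework and retesting before shipment
    "external_failure": 45_000,  # e.g. field fixes, support, warranty claims
}


def cost_of_good_quality(c):
    """Prevention + appraisal: money spent to achieve conformance."""
    return c["prevention"] + c["appraisal"]


def cost_of_poor_quality(c):
    """Internal + external failure: money lost because of nonconformance."""
    return c["internal_failure"] + c["external_failure"]


def cost_of_quality(c):
    """Total COQ: the sum of all four categories."""
    return cost_of_good_quality(c) + cost_of_poor_quality(c)


total = cost_of_quality(costs)
share = cost_of_poor_quality(costs) / total
print(f"COQ = {total}, COPQ share = {share:.0%}")  # COQ = 120000, COPQ share = 58%
```

Watching the COPQ share over time is one way to judge whether extra prevention and appraisal spending is paying off.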

7. Identify steps of internal audit process.

- planning the audit schedule

- planning the process audit

- conducting the audit

- reporting the audit

- following up on issues or improvements found

8. Explain roles in RACI model.

- responsible: ensures the right things happen at the right time

- accountable: ensures that something gets done correctly

- consulted: people who need to be consulted

- informed: people who need to be kept informed
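A RACI matrix maps each task to the people involved and their role letter. A widely used rule of thumb is that each task has exactly one Accountable person and at least one Responsible person. The sketch below checks that rule on a hypothetical matrix; the task names, people, and the `check_raci` helper are invented for illustration.

```python
# Hypothetical RACI matrix: task -> {person or team: role letter}.
raci = {
    "Design mockups": {"Designer": "R", "Design lead": "A", "Marketing": "C", "Sales": "I"},
    "Build pages": {"Developer": "R", "Tech lead": "A", "Designer": "C", "Marketing": "I"},
    "Approve budget": {"Finance": "R", "Senior management": "A", "Project manager": "A"},
}


def check_raci(matrix):
    """List rule violations: each task needs exactly one A and at least one R."""
    problems = []
    for task, assignments in matrix.items():
        roles = list(assignments.values())
        accountable = roles.count("A")
        if accountable != 1:
            problems.append(f"{task}: expected exactly one Accountable, found {accountable}")
        if "R" not in roles:
            problems.append(f"{task}: no Responsible person")
    return problems


for problem in check_raci(raci):
    print(problem)  # Approve budget: expected exactly one Accountable, found 2
```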

9. List three benefits and limitations of cost of quality system.

benefits:

- higher profitability

- more consistent products

- greater customer satisfaction

- lower costs

- meeting industry standards

- increased staff motivation

- reduced waste

limitations:

- measuring quality costs does not solve quality problems

- CoQ is merely a scoreboard for current performance

- inability to quantify the hidden quality costs (hidden factory)

10. MCQ

- The outputs of the management reviews shall include decisions and actions related to all of the following, except:

a. opportunities for improvement

b. need for changes to quality management system

c. audit results

d. resource needs

- Which of the following is not included in an action plan?

a. affected stakeholders

b. schedule

c. expected cost

d. results achieved

Answers: c. audit results; d. results achieved

11. Replace with Key Term(s)

- Cost incurred when a product fails to conform to quality specification before shipment to a customer.

- Principle that states that 80% of effects come from 20% of causes.

- Management meeting at planned intervals to ensure the company quality management system’s continuous suitability, adequacy and alignment with the strategic direction of the organization.

- Method for calculating the costs companies incur ensuring that products meet quality standards, as well as the costs of producing goods that fail to meet quality standards.

- The organization conducts examinations at planned intervals to check whether the quality management system conforms to the requirements of the International Standard and is effectively implemented and maintained.

- Percentage of an organization's total capacity or effort that is being used to overcome the cost of poor quality.

- Matrix that shows how each person contributes to a project.

- A mechanism of analyzing the defects to identify their sources.

- A cycle that is a useful tool to help your team solve problems more efficiently.

- The cost generated as a result of producing defective material.

- internal failure cost

- Pareto Principle

- management reviews

- cost of quality (COQ)

- internal audit

- hidden factory

- RACI Model/Matrix

- root cause analysis (RCA)

- PDCA

- the cost of poor quality (COPQ)
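The Pareto principle is usually applied by ranking defect causes by frequency and accumulating their share until about 80% of defects are accounted for. A minimal sketch with invented defect counts; the cause names, the `vital_few` helper, and the 80% cutoff parameter are assumptions:

```python
def vital_few(counts, cutoff_pct=80):
    """Return the most frequent causes that together account for at least
    cutoff_pct percent of all defects (largest causes first)."""
    total = sum(counts.values())
    selected, running = [], 0
    for cause, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        selected.append(cause)
        running += n
        if running * 100 >= cutoff_pct * total:  # integer math avoids float rounding
            break
    return selected


# Hypothetical defect counts per cause category (100 defects in total).
defects = {
    "missing input validation": 52,
    "null handling": 28,
    "off-by-one errors": 5,
    "date formatting": 4,
    "UI alignment": 3,
    "label typos": 3,
    "logging gaps": 2,
    "config defaults": 1,
    "timeouts": 1,
    "encoding": 1,
}

top = vital_few(defects)
print(f"{len(top)} of {len(defects)} causes explain >= 80% of defects: {top}")
```

With these made-up numbers, 2 of the 10 causes (20%) account for 80% of the defects, so they would be the first targets for root cause analysis.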

12. A company’s website redesign would require design and development teams to work on tasks concurrently. Each task would affect work in sales, marketing, finance and business development. Structural changes, timelines and major costs would require input and approval from senior management. Needs can be easily overlooked and requirements dropped in such complex projects. What should the project manager create to avoid missing important details and ensure clear communication throughout the project?

RACI Matrix

13. Identify root cause analysis tool

- Visual representations of a relationship between two sets of data to test correlation between variables.

- Includes severity, occurrence, and detection ratings to calculate risk and determine the next steps.

- Looking at any problem and drilling down by asking questions, avoiding answers that are too simple or that overlook important details.

- Every contributing cause and its potential effects can be shown under categories and sub-categories.

- Bar graph that groups the frequency distribution to show the relative significance of causes of failure.

- Group activity to collect different viewpoints encouraging a deeper level of critical thinking.

- Scatter plots.

- FMEA.

- 5 whys.

- Fishbone diagram.

- Pareto graph.

- Brainstorming.
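FMEA commonly combines its three ratings into a Risk Priority Number, RPN = severity × occurrence × detection, with each rating on a 1-10 scale; failure modes with the highest RPN are usually addressed first. A sketch with hypothetical failure modes and ratings (the `FailureMode` class is an illustrative assumption):

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # 1 (rare) .. 10 (almost certain)
    detection: int   # 1 (almost certainly detected) .. 10 (almost certainly missed)

    def __post_init__(self):
        for value in (self.severity, self.occurrence, self.detection):
            if not 1 <= value <= 10:
                raise ValueError("ratings must be between 1 and 10")

    @property
    def rpn(self) -> int:
        """Risk Priority Number: the product of the three ratings."""
        return self.severity * self.occurrence * self.detection


modes = [
    FailureMode("Payment timeout not retried", 8, 4, 6),
    FailureMode("Session cookie never expires", 7, 3, 7),
    FailureMode("Report column misaligned", 2, 6, 2),
]

# Highest RPN first: these failure modes get corrective actions first.
for m in sorted(modes, key=lambda m: m.rpn, reverse=True):
    print(f"{m.rpn:4d}  {m.name}")
```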

14. Identify type of root cause

- Picking the wrong person for a task.

- The server is not booting up.

- Instructions not accurately followed.

- Organizational.

- Physical.

- Human.

15. Identify PDCA Phase

- Incorporate your plan on a small scale.

- Identify resource needs.

- PDCA model becomes the new standard baseline.

- Audit your plan’s execution.

- Do.

- Plan.

- Act.

- Check.

16. Mention management review inputs

- status of action from previous reviews

- changes in external and internal issues

- information on the performance

- the adequacy of resources

- the effectiveness of actions

17. Mention management review outputs

- opportunities for improvement

- any need for changes

- resource needs

18. Mention the contents of an action plan

- People responsible for implementation

- Detailed description of the action

- Description of necessary tasks

- Description of the affected areas

- Affected stakeholders

- Schedule

- Cost expected

- Estimated cost of not addressing the issue

- Description of implementation actions

- Expected impact

- Identified needed pilots

Exercise 3

1. Complete

- Static review objectives include ......, ......, and ......

- It is very useful that the test basis has measurable coverage criteria to be used as ......

- An element of human psychology called ...... makes it difficult to accept information that disagrees with currently held beliefs.

- Static testing types include ......, ......, ......, and ......

- Acceptance testing of the system by administration staff is usually performed in a ......

- Finding defects is not the main focus of ...... testing; its goal is to build confidence in the software.

- Maintenance testing involves planned releases and unplanned releases called ......

- Triggers for software maintenance include ......, ......, and ......

- ...... testing is used by developers of commercial off-the-shelf (COTS) software who want to get feedback from potential/existing users and customers before the software product is put on the market.

- finding defects, gaining understanding, educating participants

- key performance indicator (KPI)

- confirmation bias

- peer review, walkthrough, technical review, inspection

- (simulated) production environment

- user acceptance

- hot fixes

- modification, migration, retirement

- beta and alpha

2. Explain the testing principles in detail.

- testing shows the presence of defects

testing can show that defects are present, but it cannot prove that there are no defects

- exhaustive testing is impossible

testing everything (all combinations of inputs and preconditions) is not feasible except in trivial cases

- defect clustering

a small number of modules usually contains most of the defects or is responsible for most of the operational failures

- early testing

to find defects early, start testing as early as possible in the SDLC

- pesticide paradox

if the same tests are repeated over and over, they eventually no longer find new defects

- testing is context dependent

testing is done differently in different contexts (e.g., a safety-critical system vs. an e-commerce system)

- absence-of-errors fallacy

fixing defects does not help if the system built is unusable or does not meet the users' needs
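A quick calculation makes "exhaustive testing is impossible" concrete. The function and the execution speed below are assumptions chosen for illustration: a function with two independent 32-bit integer inputs, tested at an (unrealistically fast) one billion tests per second.

```python
# Assumed example: a function with two independent 32-bit integer inputs.
combinations = 2 ** 32 * 2 ** 32      # every pair of input values = 2**64
tests_per_second = 1_000_000_000      # assumed, very optimistic execution speed
seconds_per_year = 365.25 * 24 * 3600

years = combinations / tests_per_second / seconds_per_year
print(f"{combinations:,} combinations -> about {years:,.0f} years of execution")
```

Even this tiny interface would need roughly 585 years, which is why test effort is focused with risk analysis, test techniques, and priorities instead.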

3. Identify three guidelines to successful conduct of review process.

- clear predefined objectives and measurable exit criteria

- the right people are involved

- testers are valued reviewers

- any checklists are up to date

- management supports a good review process

4. Clarify the tester role during each of SDLC phases. (not answered)

5. What should a tester do in case a component is not finished and he needs to conduct component integration testing?

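Use a driver to stand in for a missing component that calls the component under test, or a stub to stand in for a missing component that it calls.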
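A minimal sketch of both test doubles, with hypothetical names: `OrderService` is the component under test, `PaymentGatewayStub` replaces an unfinished component that it calls, and `run_driver` plays the role of an unfinished caller (for example a UI layer) that would normally invoke it.

```python
class PaymentGatewayStub:
    """Stub: stands in for the unfinished payment component that OrderService
    calls, returning a canned answer instead of doing real work."""

    def __init__(self, approve=True):
        self.approve = approve
        self.calls = []

    def charge(self, amount):
        self.calls.append(amount)  # record the interaction for later checks
        return self.approve


class OrderService:
    """Component under test: receives its payment gateway from outside."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount):
        if amount <= 0:
            return "rejected"
        return "confirmed" if self.gateway.charge(amount) else "payment failed"


def run_driver():
    """Driver: replaces the unfinished caller and exercises OrderService
    through its interface, checking each result."""
    stub = PaymentGatewayStub(approve=True)
    service = OrderService(stub)
    assert service.place_order(50) == "confirmed"
    assert service.place_order(0) == "rejected"
    assert stub.calls == [50]  # gateway called exactly once, with 50

    declining = OrderService(PaymentGatewayStub(approve=False))
    assert declining.place_order(20) == "payment failed"
    return "all interface checks passed"


print(run_driver())
```

Passing the gateway into the constructor is a design choice that makes it easy to swap the stub for the real component once it is finished.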

6. Compare between regression and confirmation testing types.

- confirmation testing

performed as part of defect fix verification

- after the defect is detected and fixed, the software should be re-tested

- regression testing

testing the system to check that changes have not broken previously working code

- re-run every time a change is made

- some fixes may produce unintended side effects, which are called regressions

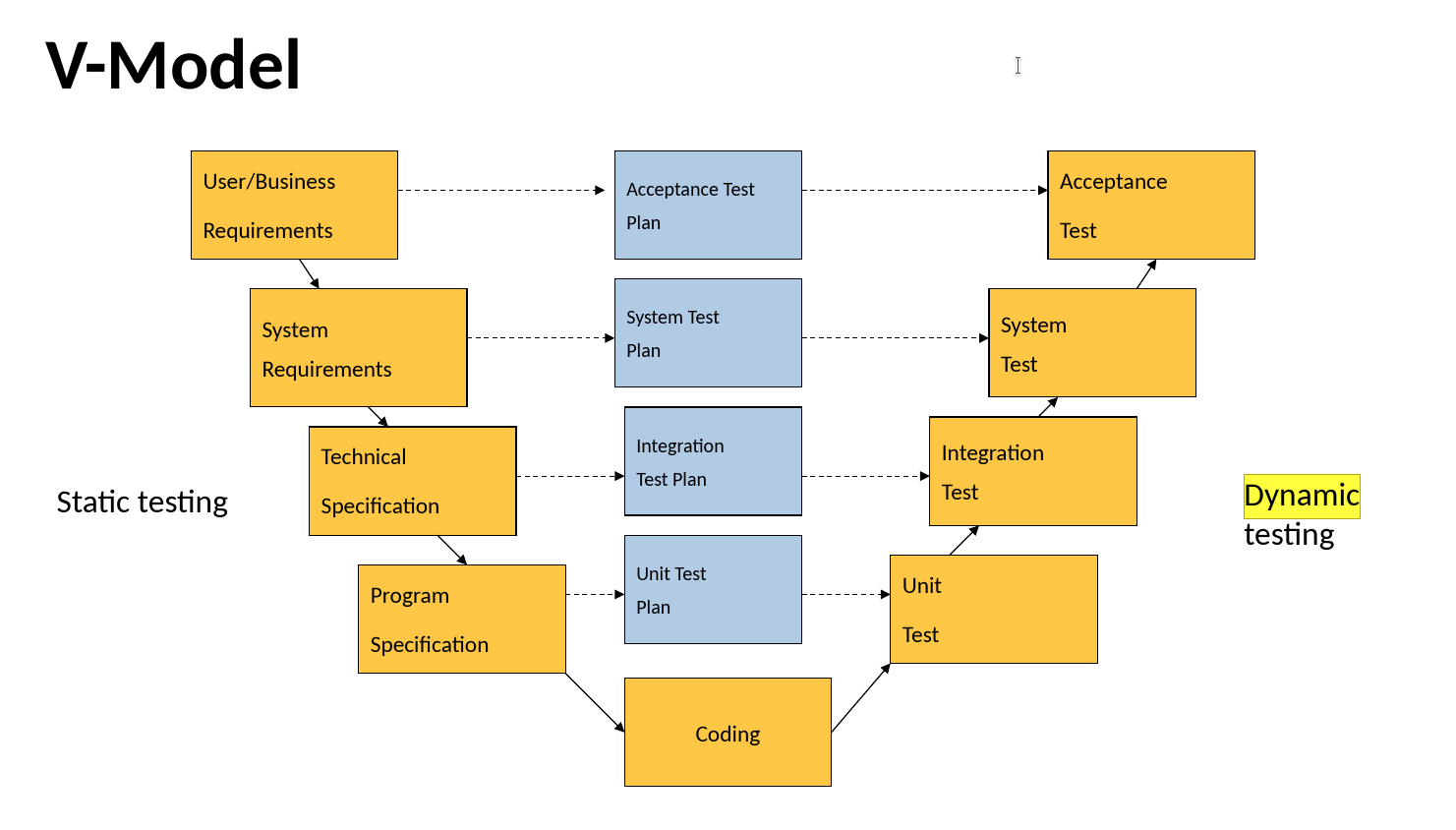

7. Illustrate steps of dynamic testing using a diagram.

8. Specify Formal Review/Inspection Activity

- Evaluating the review findings against the exit criteria to make a review decision

- Explaining the scope, objectives, process, roles, and work products to the participants

- Noting potential defects, recommendations, and questions

- Defining the entry and exit criteria

- Review meeting/issue communication and analysis

- Initiate review

- Individual preparation

- Planning

9. Whose Responsibility in Review?

- Document all the issues, problems, and open points that were identified during the meeting. With the advent of tools to support the review process, especially logging of defects/open points/decisions, there is often no need for a scribe

- Decide on the execution of reviews, allocates time in project schedules and determines if the review objectives have been met

- Lead, plan and run the review. May mediate between the various points of view and is often the person upon whom the success of the review rests

- scribe

- manager

- moderator/facilitator

10. Specify Review Technique

- Reviewers are provided with structured guidelines on how to read through the work product based on its expected usage.

- Reviewers detect issues based on set of questions based on potential defects, which may be derived from experience.

- Reviewers are provided with little or no guidance on how this task should be performed. It needs little preparation and is highly dependent on reviewer skills.

- Reviewers take on different stakeholder viewpoints in individual reviewing.

- Scenario-based/dry run.

- Checklist-based.

- Ad hoc.

- Perspective-based reading.

11. In Which Testing Level Can Defect be Found?

- Incorrect sequencing or timing of interface calls

- Incorrect code logic

- Failure of the system to work properly in the production environment(s)

- Integration testing

- Component testing

- System testing

12. Replace with Key Term(s)

- Most formal review type: led by a trained moderator and involving peers who examine the product. The defects found are documented in a logging list or issue log.

- Testing without having any knowledge of the interior workings of the application.

- is the detailed investigation of the internal logic and structure of the code, also called glass-box testing or open-box testing.

- Tests that evaluate functions that the system should perform.

- is the process of testing individual components in isolation.

- focuses on interactions and interfaces between integrated components. It is generally automated. It is often the responsibility of developers.

- focuses on interactions and interfaces between systems and packages. It is the responsibility of testers.

- a level of testing that validates the complete and fully integrated software product.

- is a stage in the testing process in which users provide input and advice on system testing.

- Users of the software test the software in a lab environment at the developer’s site.

- made available to users to allow them to experiment and to raise problems that they discover in their own environment.

- is performed against a contract’s acceptance criteria for producing custom-developed software. Acceptance criteria should be defined when the parties agree to the contract.

- is performed against any regulations that must be adhered to, such as government, legal, or safety regulations.

- is the testing of “how well” the system behaves. It involves testing the software against requirements that are non-functional in nature but important, such as performance, security, and scalability.

- It is a type of retesting that is carried out by software testers as a part of defect fix verification.

- It is possible that a change made in one part of the code may accidentally affect the behaviour of other parts of the code, whether within the same component or in other components.

- Inspection

- black-box testing

- White-box testing

- functional testing

- Component testing

- Component integration testing

- System integration testing

- system testing

- user acceptance testing (UAT)

- Alpha testing

- Beta testing

- Contractual acceptance testing

- Regulatory acceptance testing

- Non-functional testing

- Confirmation testing

- Regression testing

Exercise 4

1. Identify testing estimation technique

- Breaking down the test project into modules; sub-modules; functionalities; tasks and estimate effort/duration for each task.

- Assumes that you already tested similar applications in previous projects and collected metrics from those projects.

- Three types of estimations most likely/optimistic/pessimistic are calculated for each activity.

- Work breakdown structure is distributed to a team comprising 3-7 members for re-estimating the tasks, and the final estimate is the result of the summarized estimates based on the team agreement.

- work breakdown structure (WBS)

- experience-based testing

- PERT estimation technique

- wideband Delphi technique
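The three-point (PERT) estimate weights the most likely value four times: E = (O + 4M + P) / 6, with standard deviation SD = (P - O) / 6. A minimal sketch; the test activities and their hour estimates are hypothetical:

```python
def pert_estimate(optimistic, most_likely, pessimistic):
    """Return (expected effort, standard deviation) for one activity."""
    expected = (optimistic + 4 * most_likely + pessimistic) / 6
    std_dev = (pessimistic - optimistic) / 6
    return expected, std_dev


# Hypothetical test activities with (O, M, P) estimates in hours.
activities = {
    "write test cases": (10, 16, 28),
    "prepare test data": (4, 6, 14),
    "execute tests": (20, 30, 52),
}

total = 0.0
for name, (o, m, p) in activities.items():
    e, sd = pert_estimate(o, m, p)
    total += e
    print(f"{name}: E = {e:.1f} h, SD = {sd:.1f} h")
print(f"total expected effort: {total:.1f} h")
```

Here the three activities give 17.0 h, 7.0 h, and 32.0 h, for a total of 56.0 h.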

2. Identify Test Strategy Type

- Tests rely on making systematic use of predefined set of tests/test conditions, such as taxonomy of common types of failures, list of important quality characteristics, or company-wide look-and-feel standards.

- Tests are designed and implemented, and may immediately be executed in response to knowledge gained from prior test results rather than being pre-planned.

- Tests are designed based on some required aspect of the product, such as function, business process, internal structure, non-functional characteristic.

- Tests include reuse of existing testware (especially test cases and test data) and test suites.

- Tests driven primarily by the advice, guidance, or instructions of stakeholders, business domain experts, or technology experts, who may be outside the test team or outside the organization itself.

- methodical

- reactive

- model-based

- regression-averse

- directed

3. Identify testing life cycle phases and deliverable from each phase.

3.1 requirement analysis

- activities

- analyzing the software requirements specification (SRS)

- prepare the requirements traceability matrix (RTM)

- prioritizing the features

- analyzing the automation feasibility

- deliverables

- RTM

- automation feasibility report

3.2 test planning

answers the question: what to test?

- activities

- prepare test plan/strategy document

- test tool selection

- test effort estimation

- determine roles and responsibilities

- deliverables

- test plan/strategy document

- effort estimation

3.3 test case development

> creation and verification of test cases

- activities

- create test cases

- review the baseline test cases

- create test data

- deliverables

- test cases

- test data

3.4 environment setup

- activities

- prepare hardware and software

- setup environment

- perform smoke test

- deliverables

- environment ready

- smoke test result

3.5 test execution

- activities

- execute test cases

- document the test results

- map defects to test cases in RTM

- track defects to closure

- deliverables

- completed RTM

- test cases updated with results

- defect report

3.6 test cycle closure

the testing team meets, discusses, and analyzes the artifacts

- activities

- evaluate cycle completion criteria based on time, test coverage, cost, ...

- prepare test metrics

- prepare the test closure report

- find the defect distribution by type and severity

- deliverables

- test closure report

- test metrics

4. Mention three entry and three exit criteria.

entry criteria

- testing environment is available

- testable code is ready

- test data is ready

exit criteria

- all planned tests have been executed

- no open high-severity defects

- high-risk areas are completely tested

5. Give an example on a test work product for each of the following test activities: test planning, monitoring, analysis, design, implementation, execution and completion.

slides 30

6. Explain three differences between test strategy and test plan.

- test strategy

a high-level document that captures the approach to testing and how the testing goals will be achieved

components:

- scope

- overview

- test approach

- testing tools

- standards

other characteristics:

- written by the project manager

- derived from the business requirement specification (BRS)

- static document

- defined at the organization level

- test plan

a document that contains the plan for all testing activities

components:

- test plan identifier

- features to be tested

- features not to be tested

- pass/fail criteria

- responsibilities

- staffing and training needs

other characteristics:

- written by the test lead or test manager

- derived from the product description (SRS) and use case documents

- dynamic document

- defined at the project level

7. What is meant by test procedure?

the sequence of actions for a test

8. Exercise: Specify scenario severity and priority (High Priority & High Severity, Low Priority & High Severity, High Priority & Low Severity, Low Priority & Low Severity)

- Submit button is not working on a login page and customers are unable to login to the application.

- Crash in some functionality which is going to be delivered after a couple of releases.

- Spelling mistake of a company name on homepage.

- FAQ page takes a long time to load.

- Company logo or tagline issues.

- On a bank website, an error message pops up when a customer clicks on transfer money button.

- Font family or font size or color or spelling issue in the application or reports.

- High Priority & High Severity

- Low Priority & High Severity

- High Priority & Low Severity

- Low Priority & Low Severity

- High Priority & Low Severity

- High Priority & High Severity

- Low Priority & Low Severity

9. replace with key term

- it is a management activity which approximates how long a task would take to complete.

- estimating the test effort based on metrics of former similar projects or based on typical values.

- estimating the test effort based on the experience of the owners of the testing tasks or by experts.

- it is a quantitative measure of the degree to which a system, system component, or process possesses a given attribute.

- minimum set of conditions that should be met (prerequisite items) before starting the software testing, used to determine when a given test activity should start.

- it is concerned with summarizing information about testing effort, during and at the end of test activity/level.

- they are the set of positive and negative executable steps of a test scenario which has a set of pre-conditions, test data, expected result, post-conditions and actual results.

- ensure that users can perform appropriate actions when using valid data.

- they are performed to try to “break” the software by performing invalid (or unacceptable) actions, or by using invalid data.

- state the problem as clearly as possible so that developers can replicate the defect easily and fix it.

- it can be defined as the impact/effect of the bug on the application. It can be Showstopper/Critical/High/Medium/Low.

- it can be defined as an impact of the bug on the customers business. Main focus on how soon/urgent the defect should be fixed.

- specifies the sequence of actions for a test, i.e. one or more Test Cases, list any initial preconditions and any activities following execution.

- connects requirements to test cases throughout the validation process.

- It is a report that is created once the testing phase is successfully completed by meeting exit criteria defined for the project.

- document that gives a summary of all the tests conducted.

- gives a detailed analysis of the bugs removed and errors found.

- presents the list of known issues.

- Test Estimation

- Metrics-based technique

- Expert-based technique

- Metric

- Entry criteria

- Test reporting

- Test cases

- Positive test cases

- Negative test cases

- Defect report

- Defect severity

- Defect priority

- Test procedure

- Requirements Traceability Matrix (RTM)

- Test Closure Report
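A requirements traceability matrix can be kept as a simple mapping from requirement IDs to the test cases that cover them. The sketch below reports requirements with no tests and requirements whose linked tests failed; all IDs and results are hypothetical, and the helper names are assumptions.

```python
# Hypothetical RTM: requirement ID -> IDs of test cases that cover it.
rtm = {
    "REQ-01": ["TC-01", "TC-02"],
    "REQ-02": ["TC-03"],
    "REQ-03": [],
}
# Hypothetical execution results for each test case.
results = {"TC-01": "pass", "TC-02": "fail", "TC-03": "pass"}


def uncovered(matrix):
    """Requirements with no linked test case."""
    return [req for req, tests in matrix.items() if not tests]


def failing(matrix, outcome):
    """Requirements with at least one linked test case that failed."""
    return [req for req, tests in matrix.items()
            if any(outcome.get(tc) == "fail" for tc in tests)]


def coverage(matrix):
    """Share of requirements linked to at least one test case."""
    return (len(matrix) - len(uncovered(matrix))) / len(matrix)


print("not covered:", uncovered(rtm))
print("failing:", failing(rtm, results))
print(f"requirements coverage: {coverage(rtm):.0%}")
```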

10. Mention testing estimation techniques

- PERT.

- WBS (work breakdown structure).

- wideband Delphi technique.

- percentage distribution.

- Expert-based technique.

- metrics-based technique.

11. Mention Test strategy types

- Analytical strategy.

- Reactive strategy.

- methodical strategy.

- model-based strategy.

- consultative strategy.

- Regression-averse strategy.

- Process compliant strategy.

12. Mention the content of test procedure

- Identifier.

- Purpose.

- Special requirements.

- Procedure steps.

Exercise 5

1. replace with key terms

- Black-box testing technique that is very useful for designing acceptance tests with customer/user participation.

- The time gap between date of detection & date of closure

- Black-box testing technique useful for testing the implementation of system requirements that specify how different combinations of conditions result in different outcomes

- Testing technique where informal (not pre-defined) tests are designed, executed, logged, and evaluated dynamically during test execution. The test results are used to create tests for the areas that may need more testing

- Type of testing used to focus the effort required during testing. It is used to decide where and when to start testing and to identify areas that need more attention

- Tool used to establish and maintain integrity of work products (components, data and documentation) of the system through the whole life cycle

- Black-box testing technique used only when data is numeric or sequential

- divides data into partitions in such a way that all the members of a given partition are expected to be processed in the same way.

- is an extension of equivalence partitioning (EP) that can ONLY be used when:

- the partition is ordered.

- the partition consists of numeric or sequential data.

- allows the tester to view the software in terms of its states, transitions between states, the inputs or events that trigger state changes (transitions) and the resulting actions.

- exercises the executable statements in the code.

- is measured as the number of executable statements executed by the tests divided by the total number of executable statements in the test object, normally expressed as a percentage.

- is measured as the number of decision outcomes executed by the tests divided by the total number of decision outcomes in the test object, normally expressed as a percentage.

- exercises the decisions in the code and tests the code that is executed based on all decision outcomes.

- find the minimum number of paths that together cover all the edges.

- is where tests are derived from the tester’s skill and insight and their experience with similar applications and technologies.

- enumerates a list of possible defects and designs tests that attack these defects.

- is sometimes conducted using session-based testing to structure the activity within a defined time-box, based on a test charter containing test objectives.

- involves situations that, should they occur, may have a negative effect on a project's ability to achieve its objectives.

- involves the possibility that a work product may fail to satisfy the legitimate needs of its users/stakeholders.

- The time gap between date of detection & date of closure.

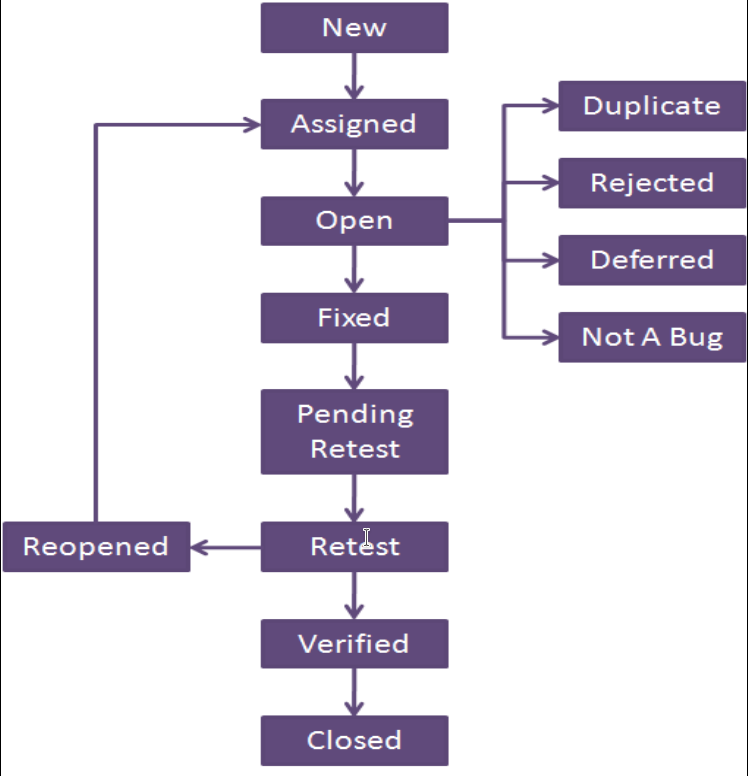

- Life cycle which a bug goes through during its lifetime, from its discovery to fixation.

- find the minimum number of paths that together cover all the nodes.

- use case testing

- defect age

- decision table testing

- exploratory testing

- risk-based testing

- configuration management

- boundary value analysis

- Equivalence partitioning

- Boundary value analysis (BVA)

- State transition testing

- Statement testing

- Coverage

- Decision testing

- Branch coverage

- Experience-based testing

- error guessing technique

- Exploratory testing

- Project risk

- Product risk

- Defect age

- Bug life cycle

- Statement coverage
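As an illustration of equivalence partitioning and boundary value analysis, assume a hypothetical input field that accepts ages 18 to 65 inclusive. EP gives three partitions (below, inside, and above the valid range), and two-value BVA picks each boundary plus its closest neighbor in the adjacent partition. The function and helper names are invented for this sketch.

```python
def is_valid_age(age):
    """Hypothetical function under test: accepts ages 18..65 inclusive."""
    return 18 <= age <= 65


def ep_values(low, high):
    """One representative value per equivalence partition."""
    return {"invalid-low": low - 10, "valid": (low + high) // 2, "invalid-high": high + 10}


def bva_values(low, high):
    """Two-value BVA: each boundary and its closest neighbor in the adjacent partition."""
    return [low - 1, low, high, high + 1]


for name, value in ep_values(18, 65).items():
    print(f"EP  {name:12} age={value:3} -> {is_valid_age(value)}")
for value in bva_values(18, 65):
    print(f"BVA age={value:3} -> {is_valid_age(value)}")
```

EP needs only three tests (ages 8, 41, 75), while BVA adds the edge cases 17, 18, 65, and 66, where off-by-one mistakes in the comparison operators would show up.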

2. Identify Type of Project Risk

- Test environment not ready on time

- Skill, training and staff shortages

- contractual issues

- Improper attitude such as not appreciating the value of finding defects during testing

- Low quality of the design, code, or configuration data

- technical issues

- organizational factors

- supplier issues

- organizational factors

- technical issues

3. Identify the approach used in testing tools

- Generic script processes action words describing the actions to be taken, which then calls scripts to process the associated test data

- Separate out the test inputs and expected results, usually into a spreadsheet, and uses a more generic test script that can read the input data and execute the same test script with different data.

- Enable a functional specification to be captured in the form of a model, such as an activity diagram. This task is generally performed by a system designer.

- keyword-driven testing approach

- data-driven testing approach

- model-based testing
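In the data-driven approach, inputs and expected results live in a table (often a spreadsheet) and one generic script runs the same steps for every row. A minimal sketch with Python's `unittest` and `csv` modules; the CSV contents and the `discount` function under test are hypothetical, and the data is inlined with `io.StringIO` so the example is self-contained.

```python
import csv
import io
import unittest


def discount(total):
    """Hypothetical function under test: 10% off orders of 100 or more."""
    return round(total * 0.9, 2) if total >= 100 else total


# In practice this table would live in a spreadsheet or CSV file kept by testers.
TEST_DATA = """total,expected
50,50
99.99,99.99
100,90.0
250,225.0
"""


class DiscountDataDrivenTest(unittest.TestCase):
    def test_rows(self):
        # One generic script: read each row and run the same check with its data.
        for row in csv.DictReader(io.StringIO(TEST_DATA)):
            with self.subTest(row=row):
                self.assertAlmostEqual(discount(float(row["total"])), float(row["expected"]))


if __name__ == "__main__":
    unittest.main(argv=["data-driven"], exit=False)
```

Adding a new test then only means adding a row to the table; the script itself does not change.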

4. How to measure the decision testing coverage?

Coverage is measured as the number of decision outcomes executed by the tests divided by the total number of decision outcomes in the test object, normally expressed as a percentage.
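A small worked example of why statement coverage and decision coverage can differ. The hypothetical `absolute` function has one `if` without an `else`: a single test with a negative input executes every statement (100% statement coverage) but exercises only the True outcome of the decision (1 of 2 outcomes, 50% decision coverage). Outcomes are recorded by hand here purely for illustration; in practice a coverage tool (for example coverage.py with branch measurement enabled) does this.

```python
# Decision outcomes (True/False) seen while testing, recorded by hand for illustration.
observed_outcomes = set()


def absolute(x):
    """Hypothetical function under test containing one two-way decision."""
    is_negative = x < 0
    observed_outcomes.add(is_negative)  # record which outcome this call took
    if is_negative:
        x = -x
    return x


def decision_coverage(observed, total_outcomes=2):
    """Decision outcomes exercised / total decision outcomes, as a percentage."""
    return 100 * len(observed) / total_outcomes


assert absolute(-5) == 5  # runs every statement, but only the True outcome
print(f"after test 1: {decision_coverage(observed_outcomes):.0f}% decision coverage")  # 50%

assert absolute(3) == 3   # adds the False outcome
print(f"after test 2: {decision_coverage(observed_outcomes):.0f}% decision coverage")  # 100%
```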

5. When is exploratory testing most appropriate?

Most useful where:

- there are few or inadequate specifications

- there is severe time pressure

6. What is the common minimum coverage standard for decision table testing?

to have at least one test case per decision rule in the table.

7. To achieve 100% coverage in equivalence partitioning, test cases must cover all identified partitions by using (at least one value from each partition). Complete.

8. Statement and decision testing are most commonly used at the (component) test level. Complete.

9. Risk level of an event can be determined based on ( Likelihood/probability of an event happening) and (Impact/harm resulting from event) . Complete.
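A minimal sketch of risk-based prioritization, assuming a simple 1-5 scale for both factors and risk level = likelihood × impact (one common scoring scheme, not the only one). The product risks and their ratings are hypothetical.

```python
# Hypothetical product risks rated on an assumed 1 (low) .. 5 (high) scale.
risks = [
    {"risk": "Incorrect interest calculation", "likelihood": 3, "impact": 5},
    {"risk": "Slow report generation", "likelihood": 4, "impact": 2},
    {"risk": "Typo in help text", "likelihood": 2, "impact": 1},
    {"risk": "Transfer fails under load", "likelihood": 4, "impact": 5},
]


def risk_level(r):
    """Risk level as the product of likelihood and impact."""
    return r["likelihood"] * r["impact"]


# Highest risk first: these areas are tested earliest and most thoroughly.
for r in sorted(risks, key=risk_level, reverse=True):
    print(f"{risk_level(r):2}  {r['risk']}")
```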

10. Who is responsible for testing in the following test levels: operational acceptance / unit / system testing?

| level | responsibility (done by) |
|---|---|
| component integration | developers |
| system integration | independent test team |
| operational acceptance | operations/systems administration staff |
| user acceptance | business analysts, subject matter experts, and users |

11. Contrast benefits and drawbacks of hiring independent testers.

- benefits

  - testers are unbiased and likely to find different kinds of defects than developers

  - testers can verify assumptions made by people during specification and implementation of the system

- drawbacks

  - isolation from the development team

  - developers may lose a sense of responsibility for quality

  - testers may be seen as a bottleneck for release

12. Resulting product risk information is used to guide test activities. Elaborate.

- determine the test techniques to be employed.

- determine the extent of testing to be carried out.

- prioritize testing to find the critical defects as early as possible.

- determine whether any non-testing activities could be employed to reduce risk (e.g., providing training to inexperienced designers).

13. Illustrate the bug life cycle using a diagram.
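No diagram survives for this question. As a textual stand-in, the sketch below encodes one common variant of the bug life cycle as a state transition table (New, Assigned, Open, Fixed, Retest, Closed, with Reopened, Rejected, Duplicate, and Deferred branches). These state names are an assumption; defect tracking tools and teams define their own workflows.

```python
# One common variant of the bug life cycle; tools and teams define their own.
TRANSITIONS = {
    "New": {"Assigned", "Rejected", "Duplicate", "Deferred"},
    "Assigned": {"Open"},
    "Open": {"Fixed", "Deferred"},
    "Fixed": {"Retest"},
    "Retest": {"Closed", "Reopened"},
    "Reopened": {"Assigned"},
    "Deferred": {"Assigned"},
    "Rejected": set(),   # terminal: not accepted as a valid defect
    "Duplicate": set(),  # terminal: already reported
    "Closed": set(),     # terminal: fix confirmed by retest
}

class Bug:
    def __init__(self, title: str):
        self.title = title
        self.state = "New"
        self.history = ["New"]

    def move_to(self, new_state: str) -> None:
        """Change state only along an allowed transition of the life cycle."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state
        self.history.append(new_state)
```

A bug whose fix fails retesting goes Retest, Reopened, Assigned and then around the loop again until the retest passes and it can be closed.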

14. Identify three potential risks of using tools to support testing.

- time and cost for the initial introduction of the tool

- vendor may provide a poor response for support, defect fixes, and upgrades

- may be relied on too much

- new technology may not be supported by the tool

- Expectations of the tool may be unrealistic

- Version control of test assets may be neglected

15. After completing the tool selection and a successful proof-of-concept evaluation, introducing the selected tool into an organization generally starts with a pilot project. Why?

- gain knowledge about the tool

- evaluate how the tool fits with existing processes and practices

- decide on standard ways of using the tool

- understand the metrics that you wish the tool to collect and report

16. Factors in choosing a test technique

- type of system.

- type of risk.

- Level of risk.

- Customer or contractual requirements.

- test objective.

- Time and budget.

- knowledge of testers.

17. Identify the testing role

- Component integration testing level

- System integration testing level

- operational acceptance test level

- user acceptance test level

- developers.

- independent test team.

- operations/systems administration staff.

- business analysts, subject matter experts, and users.

18. Potential benefits of using tools to support testing

- Reduction in repetitive manual work.

- Greater consistency and repeatability.

- More objective assessment.

- Easier access to information about testing.

19. Tool support for specialized testing

- Usability testing.

- Security testing.

- Accessibility testing.

- Localization testing.

- portability testing.

20. Test execution tool approaches

- Data-driven testing approach.

- Keyword-driven testing approach.

- Model-based testing tools (MBT).

21. Mention Black-box Test case design techniques

- Equivalence Partitioning (EP)

- Boundary Value Analysis (BVA)

- Decision Tables

- State Transition Testing

- Use Case Testing

22. Mention White-box (Structure based) test case design techniques

- Statement testing

- Decision testing

23. Mention Experience based test case design techniques

- Error guessing

- Exploratory testing

24. Mention the tester's role

- prepare test data

- review test plan

- design test cases

- evaluate non-functional characteristics

- design test environment

- execute tests

25. Success factors for tools

- introducing the tool incrementally to the organization

- adapting and improving processes to fit with the use of the tool

- provide training for the tool users

- Monitoring tool use

- provide support to the users

Exercise 6

1. complete

- The (.....) is responsible for quality in Agile projects.

- XP teams use (.....) time to pay down (.....) by refactoring code or doing research.

- A cross-functional team in XP releases (.....) frequently.

- Agile projects have short iterations; thus, the project team receives (.....) and (.....) feedback on product quality throughout the SDLC.

- The general objective of (.....) is to visualize and optimize the flow of work within a value-added chain.

- One programmer plays the (.....) role, focuses on clean code, and compiles and runs it. The second plays the role of (.....), focuses on the big picture, and reviews the code for improvement or refactoring.

- There are 2 levels of plans in XP: (.....) and (.....). In both levels, there are 3 steps: (.....).

- User stories must address (.....) and (.....) characteristics. Each story includes (.....) for these characteristics, used to decide when a task is (.....).

- All team members, testers and non-testers, can (.....) on both testing and non-testing activities.

- (whole team)

- (slack time - technical debt)

- (Minimum Viable Product (MVP))

- (early - continuous)

- (Kanban)

- (pilot - navigator)

- (release planning - iteration planning - exploration, commitment, and steering)

- (functional - non-functional - acceptance criteria - finished)

- (provide input)

2. replace with key terms

- the team and stakeholders collaboratively decide what are the requirements and features that can be delivered into production and when.

- the team will pick up the most valuable items from the list and break them down into tasks then estimates and a commitment to delivering at the end of the iteration.

- a naming convention practice used in design and code to have a shared understanding between teams.

- management approach used in situations where work arrives in an unpredictable fashion.

- The value chain to be managed is visualized by a Kanban board. Each column shows a station. The items to be produced or tasks to be processed are symbolized by tickets.

- The amount of parallel active tasks is strictly limited. This is controlled by the maximum number of tickets allowed for a station and/or globally for the board.

- is the time between a request being made and a task being released.

- is the actual work-in-progress time, from when work on a task starts until it is released.

- is the Agile form of requirements specification, explaining how the system should behave with respect to a single, coherent feature or function.

- Larger collections of related features, or a collection of sub-features that make up a single complex feature.

- Collaborative authorship of the user story can use techniques such as brainstorming and mind mapping.

- is a meeting held at the end of each iteration to discuss what was successful, what could be improved, and how to incorporate the improvements and retain the successes in future iterations.

- XP teams conduct a small test or proof-of-concept experiment.

- release planning

- Iteration planning

- System metaphor

- Kanban

- Kanban board

- Work in Progress (WIP) limit

- Kanban lead time

- Kanban cycle time

- User story

- Epics

- INVEST technique

- Retrospective

- Spike
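A minimal sketch of a Kanban board that enforces a per-station WIP limit, assuming hypothetical column names and limits. The global board-wide limit mentioned above is left out for brevity. A ticket can only be pulled into a column that still has free capacity.

```python
class KanbanBoard:
    """Minimal Kanban board: columns are stations, tickets are work items."""

    def __init__(self, wip_limits: dict[str, int]):
        # Column order follows the insertion order of wip_limits.
        self.wip_limits = wip_limits
        self.columns: dict[str, list[str]] = {name: [] for name in wip_limits}

    def pull(self, ticket: str, column: str) -> bool:
        """Move a ticket into a column only if that column is below its WIP limit."""
        if len(self.columns[column]) >= self.wip_limits[column]:
            return False  # limit reached: finish work in progress before starting more
        for tickets in self.columns.values():
            if ticket in tickets:
                tickets.remove(ticket)
        self.columns[column].append(ticket)
        return True
```

With a limit of 2 on "In Progress", a third ticket is refused until one of the active tickets moves on to "Done", which is exactly how the limit keeps the number of parallel active tasks small.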

3. Mention Aspects of agile approaches

- Agile software development approaches.

- Collaborative user story creation.

- Retrospectives.

- Continuous integration.

- Release and iteration planning.

4. Mention agile software development approaches

- Extreme programming (XP).

- Scrum.

- Kanban.

5. Mention XP Values

- Communication.

- feedback.

- Simplicity.

- Courage.

- respect.

6. Identify the main planning process in XP practices

- There are 2 levels:

  - release planning.

  - iteration planning.

- Each level has 3 steps:

  - exploration.

  - commitment.

  - steering.

7. Compare Scrum and Kanban

8. Explain INVEST

- I -> Independent

- N -> Negotiable

- V -> Valuable

- E -> Estimable

- S -> Small

- T -> Testable

9. Explain the 3 Cs concept

- Card: the physical media describing a user story.

- Conversation: explains how the software will be used.

- Confirmation: the acceptance criteria.

10. Mention benefits of early and frequent feedback

- avoiding requirements misunderstandings

- clarifying customer feature requests

- discovering and resolving quality problems early

- providing information to the Agile team regarding its productivity

Exercise 7

1. complete

- When regression testing is automated, Agile testers are freed to concentrate their manual testing on (.....) and (.....) testing of defect fixes.

- In Agile projects, no feature is considered done until it has been (.....) and (.....) with the system.

- (.....) iterations occur periodically to resolve any remaining defects and other forms of (.....).

- Because of the heavy use of test automation, a higher percentage of the manual testing on Agile projects tends to be done using (.....) testing.

- While developers focus on creating unit tests, testers should focus on creating automated (.....) and (.....) tests.

- Changes to existing features have testing implications, especially (.....) testing implications.

- During an iteration, any given user story will typically progress sequentially through the following test activities: (.....) and (.....).

- (new features, implemented changes - confirmation)

- (integrated - tested)

- (stabilization - technical debt)

- (experience-based testing)

- (integration, system - system integration)

- (regression)

- (unit testing - feature acceptance testing)

2. replace with key terms

- occur periodically to resolve any remaining defects and other forms of technical debt.

- address defects remaining from the previous iteration at the beginning of the next iteration, as part of the backlog for that iteration.

- is functional/feature testing of expected behaviors of an application as a whole.

- involves cooperative stakeholders using plain language to write acceptance tests based on the shared understanding of user story requirements.

- compares expected output with actual output; a mechanism that determines whether software executed correctly for a test case

- A chart used to track progress across the entire release and within each iteration

- lines of code added, modified, or deleted from one version to another

- Stabilization iterations

- fix bugs first

- Behavior-driven development (BDD)

- Acceptance test-driven development (ATDD)

- Test oracle

- Burndown charts

- code churn
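Code churn can be computed by diffing two versions of a file. The sketch below uses Python's standard `difflib.SequenceMatcher`. Counting paired lines in a `replace` block as modified (and any surplus as added or deleted) is an assumption of this example, since churn tools differ in how they classify changed lines.

```python
import difflib

def code_churn(old: str, new: str) -> dict[str, int]:
    """Count lines added, deleted, and modified between two versions of a file."""
    old_lines, new_lines = old.splitlines(), new.splitlines()
    churn = {"added": 0, "deleted": 0, "modified": 0}
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "insert":
            churn["added"] += j2 - j1
        elif tag == "delete":
            churn["deleted"] += i2 - i1
        elif tag == "replace":
            # Pair old and new lines as modifications; surplus lines count as added/deleted.
            paired = min(i2 - i1, j2 - j1)
            churn["modified"] += paired
            churn["added"] += (j2 - j1) - paired
            churn["deleted"] += (i2 - i1) - paired
    return churn

print(code_churn("a\nb\nc\n", "a\nB\nc\nd\n"))  # {'added': 1, 'deleted': 0, 'modified': 1}
```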

3. Automated activities of Continuous integration process

- Static code analysis.

- Compile.

- Unit test.

- deploy.

- integration test.

- Report.
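The automated CI activities above run as an ordered sequence in which a failing step stops the build. The sketch assumes each stage is a hypothetical callable returning `True` on success; real CI servers describe their stages in their own configuration formats, and the returned list here stands in for the report step.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]

def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run CI stages in order, stop at the first failure, and return a report."""
    report = []
    for name, stage in stages:
        passed = stage()
        report.append(f"{name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            break  # later stages depend on earlier ones, so the build stops here
    return report

# Hypothetical stages; each lambda stands in for a real tool invocation.
stages: list[Stage] = [
    ("static code analysis", lambda: True),
    ("compile", lambda: True),
    ("unit test", lambda: False),
    ("deploy", lambda: True),
    ("integration test", lambda: True),
]
for line in run_pipeline(stages):
    print(line)
```

Because the unit tests fail, nothing is deployed and the integration tests never run, giving the team fast feedback on the broken build.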

4. Continuous Integration Challenges

- CI tools have to be introduced and maintained.

- CI process must be defined and established.

- Test automation requires additional resources and can be complex to establish.

- Thorough test coverage is essential to achieve automated testing advantages.

- Teams sometimes over-rely on unit tests and perform too little system and acceptance testing.

5. Test Activities During Iteration

- Unit testing.

- Feature acceptance testing:

  - Feature verification testing.

  - Feature validation testing.

- Regression testing (in parallel throughout the iteration).

- System test level.

6. Categories of “project work products” of interest to Agile testers

- Business-oriented work products.

- Development work products.

- Test work products.

7. Organizational Options for Independent Testing

- embedded tester(s) within the team

  - risk of loss of independence and loss of objective evaluation

- fully independent, separate test team during the final days of each sprint

  - provides unbiased evaluation

  - time pressures and lack of understanding of the product can lead to problems with this approach

- independent, separate test team assigned to the Agile team on a long-term basis at the beginning of the project

8. Agile Testing Techniques

- Test-driven development (TDD).

- Acceptance test-driven development (ATDD).

- Behavior-driven development (BDD).

9. TDD process

- Add test.

- watch test fail.

- write code.

- run test.

- refactor.
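A minimal TDD sketch with Python's standard `unittest` module, using a hypothetical `is_leap_year` function. The test class is written first; until the function exists, running the tests ends in a `NameError`, which corresponds to the "watch test fail" step. The implementation is then the simplest code that makes the tests pass, and the tests are rerun after each refactoring.

```python
import unittest

# Step 1 (add test): written before the production code exists.
class TestLeapYear(unittest.TestCase):
    def test_century_years_need_divisibility_by_400(self):
        self.assertFalse(is_leap_year(1900))
        self.assertTrue(is_leap_year(2000))

    def test_ordinary_years(self):
        self.assertTrue(is_leap_year(2024))
        self.assertFalse(is_leap_year(2023))

# Step 2 (watch test fail): at this point the tests error with NameError,
# because is_leap_year does not exist yet.

# Step 3 (write code): the simplest implementation that makes the tests pass.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

# Steps 4 and 5 (run test, refactor): rerun the tests after every change.
```

Saved to a file, the tests can be run with `python -m unittest`; the passing suite then doubles as regression protection and as documentation of the expected behavior.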

10. TDD benefits

- Code coverage.

- regression testing.

- simplifying debugging.

- system documentation.

11. BDD format

- Given (context)

- When (the action the user performs)

- Then (expected outcome)
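The Given/When/Then format can be mirrored in an ordinary Python test, as sketched below for a hypothetical `Account` class. BDD frameworks such as Cucumber or behave instead parse plain-language scenarios and bind each step to code; this example shows only the structure, without any BDD framework.

```python
class Account:
    """Hypothetical system under test."""

    def __init__(self, balance: int):
        self.balance = balance

    def withdraw(self, amount: int) -> bool:
        """Withdraw if funds allow; return whether the withdrawal was accepted."""
        if amount > self.balance:
            return False
        self.balance -= amount
        return True

def test_withdrawal_within_balance():
    # Given an account with a balance of 100
    account = Account(balance=100)
    # When the user withdraws 30
    accepted = account.withdraw(30)
    # Then the withdrawal is accepted and the balance becomes 70
    assert accepted and account.balance == 70

def test_withdrawal_exceeding_balance():
    # Given an account with a balance of 20
    account = Account(balance=20)
    # When the user tries to withdraw 50
    accepted = account.withdraw(50)
    # Then the withdrawal is rejected and the balance is unchanged
    assert not accepted and account.balance == 20
```

Both functions follow the `test_` naming convention, so a runner such as pytest can collect them directly.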

12. Compare TDD, BDD, and ATDD

13. Mention Agile principles

- Stabilization iterations

- fix bugs first

- Pairing

- Test automation at all levels

- Developers focus on creating unit tests

- Testers should focus on creating automated tests

- Lightweight work product documentation

14. Benefits of automated tests

- provide rapid feedback on product quality

- provide a living document of system functionality

- automated unit tests provide feedback on build quality but not on product quality.

Exercise 8

1. complete

- In the test pyramid, (.....) and (.....) level tests are automated and created using (.....) tools. At (.....) and (.....) levels, automated tests are created using (.....) tools.

- Testing quadrants apply to (.....) testing only.

- (.....) strategies can be used by the testers in Agile teams to help determine the acceptable number of test cases to execute.

- The iteration starts with iteration (.....), which ends in tasks on a task board. These tasks can be (.....) in part based on the level of (.....) associated with them. The aim is to know which tasks should start (.....) and involve more (.....) effort.

- During iteration planning, the team assesses each identified risk, which includes two activities: (.....) and determining (.....) based on (.....) and (.....).

- Information from the (.....) is used during planning poker sessions to determine priorities of items to be completed in the (.....).

- The main part of the lifecycle cost often comes from (.....).

- (unit - integration - API-based - system - acceptance - GUI-based)

- (dynamic)

- (risk identification, analysis, and risk mitigation)

- (planning - prioritized - quality risks - earlier - testing)

- (categorizing risks - its level of risk - impact - the likelihood of defects)

- (risk analysis - iteration)

- (maintenance after the product has been released)

2. replace with key terms

- is the first iteration of the project where many preparation activities take place.

- Each team member brings a different set of skills to the team. The team works together on test strategy, planning, specification, execution, evaluation, and results reporting.

- The team may consist only of developers, but ideally it would include one or more testers.

- Testers sit together with the developers and the product owner.

- Testers collaborate with team members (stakeholders, product owner, Scrum Master).

- Technical decisions regarding design and testing are made by the team as a whole.

- The tester is committed to question and evaluate the product’s behaviour and characteristics with respect to the expectations and needs of the customers and users.

- Development and testing progress is visible on the Agile task board.

- The tester must ensure the credibility of the strategy for testing, its implementation, and execution, otherwise the stakeholders will not trust the test results.

- Retrospectives allow teams to learn from successes and from failures.

- Testing must be able to respond to change, like all other activities in Agile projects.

- Externally observable behaviour with user actions as input operating under certain configurations.

- How the system performs the specified behaviour. Referred to as quality attributes or non-functional requirements.

- A sequence of actions between an external actor (often a user) and the system, in order to accomplish a specific goal or business task.

- Activities that can only be performed in the system under certain conditions defined by outside procedures and constraints.

- Connections between the system and the outside world.

- Design and implementation constraint that will restrict the options for the developer such as embedded software respecting physical constraints such as size, weight.

- The customer may describe the format, data type, allowed values, and default values for a data item in the composition of a complex business data structure.

- Communication tool that allows teams to build and share an online knowledge base on tools and techniques for development and testing activities.

- are used to store source code and automated tests, manual tests and other test work products in same repository.

- Tools that generate data to populate an application’s database are very beneficial when a lot of data and combinations of data are necessary to test the application.

- Manual data entry is often time consuming and error prone, but data load tools are available to make the process reliable and efficient.

- Specific tools are available to support test-first approaches, such as BDD, TDD, ATDD.

- Tools capture and log activities performed on an application during an exploratory test session

- how many defects are found per day or per transaction

- number of defects found compared to number of user stories, effort, and/or quality attributes

- provides the test conditions to cover during a time-boxed testing session.

- uninterrupted period of testing that could last from 60 to 120 minutes

- Sprint zero

- Cross-functional

- Self-organizing

- Co-located

- Collaborative

- Empowered

- Committed

- Transparent

- Credible

- Open to feedback

- Resilient

- Functional behavior

- Quality characteristics

- Scenarios (use cases)

- Business rules

- External interfaces

- Constraints

- Data definitions

- Wikis

- configuration management tools

- Test data preparation and generation tools

- Test data load tools

- Automated test execution tools

- Exploratory test tools

- Defect intensity

- Defect density

- test charter

- Session

3. Mention Test Quadrants

| Quadrant Q1 | Quadrant Q2 | Quadrant Q3 | Quadrant Q4 |
|---|---|---|---|
| Unit level | System level | System or user acceptance level | System or operational acceptance level |
| Automated | Manual or automated | Manual and user-oriented | Automated |
| Unit tests | Functional acceptance tests, e.g., story tests, user experience prototypes, and simulations | e.g., exploratory testing, scenarios, process flows, usability testing, user acceptance testing, alpha testing, and beta testing | Quality tests, e.g., performance, load, stress, and scalability tests, security tests, maintainability, memory management, compatibility and interoperability, data migration, infrastructure, and recovery testing |

4. Agile Tester Skills

- Competent in test automation, TDD, BDD, ATDD, white-box, black-box, and experience-based testing.

- positive and solution-oriented with team members.

- Actively acquire information from stakeholders

- Accurately evaluate and report test results.

- Plan and organize their own work.

- Respond to change quickly.

- Collaborative.

5. Agile Tester Role

- Understanding, implementing, and updating test strategy.

- Measuring and reporting test coverage.

- Ensuring proper use of testing tools.

- Actively collaborating with developers and business stakeholders.

- Reporting defects and working with the team to resolve them.

- Participating in team retrospectives.

6. Mention common techniques for estimating testing effort in Agile projects based on content and risk

- planning poker.

- T-shirt sizing.

7. Test sessions include

- Survey session.

- Analysis session.

- Deep coverage.

8. Mention tools to support communication and information sharing

- wikis.

- instant messaging.

- desktop sharing.

9. Tester role in scrum projects

- Cross-functional

- Self-organizing

- Co-located

- Collaborative

- Empowered

- Committed

- Transparent

- Credible

- Open to feedback

- Resilient

10. Activities of sprint zero

- Identify the scope of the project

- Create an initial system architecture

- Plan, acquire, and install needed tools

- Create an initial test strategy

- Perform an initial quality risk analysis

- Create the task board

11. Quality Risk Analysis During Iteration Planning

- Gather the Agile team members together

- List all the backlog items for the current iteration

- Identify the quality risks

- Assess each identified risk:

  - categorizing the risk

  - determining its level of risk

- Determine the extent of testing

- Select the appropriate test technique(s) to reduce each risk

- The tester then designs, implements, and executes tests to mitigate the risks.

12. Mention the topics that are addressed by acceptance criteria

- Functional behavior

- Quality characteristics

- Scenarios

- Business rules

- External interfaces

- Constraints

- Data definitions

13. Mention uses of physical story/task boards in agile teams

- Record stories

- capture team members' estimates on their tasks and calculate effort

- Provide a visual representation

- Integrate with configuration management tools

14. Benefits of desktop sharing and capturing tools

- Used for distributed teams, product demonstrations, code reviews, and even pairing.

- Capturing product demonstrations at the end of each iteration.

General Exercises

1. Complete

- During exploratory testing, the results of the most recent tests guide the (.....).

- (.....) is an uninterrupted period of testing that could last from 60 to 120 minutes.

- Test sessions include a (.....) session to learn how it works, an (.....) session to evaluate functionality, and (.....) for corner cases, scenarios, and interactions.

- (.....) provides a visual representation (via metrics, charts, and dashboards) of the current state of each user story, the iteration, and the release, allowing all stakeholders to quickly check status.

- Some Agile teams opt for an all-inclusive tool called (.....) that provides relevant features (such as task boards, burndown charts, and user stories).

- (.....) allow teams to build up an online knowledge base on tools and techniques for development and testing activities.

- The risk of introducing regression in Agile development is high due to extensive (.....). To maintain velocity without incurring a large amount of (.....), it is critical that teams invest in (.....) at all test levels early.

- It is critical that all test assets are kept up to date with each (.....).

- Testers need to allocate time in each (.....) to review manual and automated test cases from previous and current iterations to select test cases that may be candidates for the regression test suite.

- (.....), (.....), and (.....) are three complementary techniques in use among Agile teams for testing across various test levels.

- Benefits of test-driven development include (.....), (.....), and (.....).

- Results of BDD are (.....) used by the developer to develop test cases.

- BDD is concerned primarily with the specification of the behavior of the system under test as a whole, and is thus suited for (.....) and (.....) testing.

- (Test Creation)

- (Session)

- (Survey session - Analysis session - Deep coverage)

- (physical story/task boards)

- (test management tools)

- (Wikis)

- (code churn - technical debt - test automation)

- (iteration)

- (iteration)

- (Test-driven development - behavior-driven development - acceptance test-driven development)

- (Code coverage - Regression testing - Simplified debugging - System documentation)

- (test classes)

- (acceptance - regression)

2. Replace with Key Term(s)

- Release that helps the development team and on-site customer demonstrate the product and focus only on the least amount of work that has the highest priority.

- XP team is not doing actual development during this time but acts as a buffer to deal with uncertainties and issues.

- Planning level where the requirements and features that can be delivered into production are decided based on the priorities, capacity, estimations, and risk factors of the team to deliver.

- In Kanban, the amount of parallel active tasks is strictly limited. This is controlled by the maximum number of tickets allowed for a station and/or globally for the board.

- Methodology used in situations where work arrives in an unpredictable fashion or when you want to deploy work as soon as it is ready, rather than waiting for other work items.

- Practice of integrating changes every few hours or on a daily basis, so that after every code compilation and build, the changes are integrated and all tests are executed automatically for the entire project.

- Testing technique creates a functional/behavioral test for a requirement that fails because the feature does not exist.

- Testing technique where stakeholders write acceptance tests in plain language based on shared understanding of user story requirements.

- Common effort estimation technique used in Agile projects.

- Technique used by testers to write effective user stories and maintain collaborative authorship.

- Testing where test design and test execution occur at the same time, guided by a prepared test charter.

- Chart represents the amount of work left to be done against time allocated to the release or iteration.

- Testing adequate in Agile projects in case of limited time available for test analysis and limited details of the user stories.

- Capture story cards, development tasks, test tasks created during iteration planning, using color-coordinated cards.

- Is the software product easy to use, learn and understand from the user’s perspective?

- The effort needed to make specified modifications. Is the software product easy to maintain?

- The relationship between the level of performance of the software and the amount of resources used, under stated conditions. Does the software product use the hardware, system software, and other resources efficiently?

- It refers to the validation of the documents and specifications on which test cases are designed.

- The ability of software to be transferred from one environment to another. Is the software product portable?

- The ability to interact with specified systems. Does the software product work with other software applications, as required by the users?

- checking default languages, currency, date, and time format if it is designed for a particular region/locality.

- It is done in order to check how fast and better the application can recover after it has gone through any type of crash or hardware failure.

- Minimum Viable Product (MVP)

- Slack time

- release planning

- Work-in-Progress (WIP) Limit

- Kanban

- Continuous Integration (CI)

- BDD

- ATDD

- planning poker and T-shirt sizing

- INVEST Technique

- Exploratory testing

- burndown charts

- Exploratory testing

- Agile Task Board

- Usability

- Maintainability

- Efficiency

- Baseline

- Portability

- Interoperability

- Localization

- Recovery
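A burndown chart plots the remaining work against the time allocated to the release or iteration. The sketch computes the ideal line and the actual remaining work per day; the story point figures are hypothetical, and drawing the chart itself is left out.

```python
def ideal_burndown(total_points: float, days: int) -> list[float]:
    """Straight line from the total work on day 0 down to zero on the last day."""
    return [total_points - total_points * day / days for day in range(days + 1)]

def actual_burndown(total_points: float, completed_per_day: list[float]) -> list[float]:
    """Remaining work at the end of each day, given the points completed that day."""
    remaining = [total_points]
    for done in completed_per_day:
        remaining.append(remaining[-1] - done)
    return remaining

# Hypothetical 4-day iteration with 20 story points of committed work.
print(ideal_burndown(20, 4))               # [20.0, 15.0, 10.0, 5.0, 0.0]
print(actual_burndown(20, [3, 5, 0, 8]))   # [20, 17, 12, 12, 4]
```

The actual line stays above the ideal one and ends with 4 points left, which the chart would show as the team being behind plan.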

3. What does collective code ownership mean?

Success or failure is a collective effort and there is no blame game. There is no one key player here, so if there is a bug or issue then any developer can fix it.

4. List categories of project work products of interest to Agile testers. Mention one example on each category.

- Business-oriented work products: requirements specifications/user stories

- Development work products: database ERD, code, unit tests

- Test work products: test strategies and plans, manual and automated tests

5. In a typical Agile project, it is a common practice to avoid producing vast amounts of documentation. Comment.

- focus is more on having working software, together with automated tests that demonstrate conformance to requirements.

- This encouragement to reduce documentation applies only to documentation that does not deliver value to the customer.

6. Differentiate between lead and cycle times

- lead time: the time between a request being made and a task being released.

- cycle time: the actual work-in-progress time, from when work on a task starts until it is released.
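The difference between the two measures can be shown with a single ticket, assuming hypothetical timestamps for when the request was made, when work started, and when the task was released.

```python
from dataclasses import dataclass
from datetime import datetime

SECONDS_PER_DAY = 86400

@dataclass
class Ticket:
    requested: datetime  # request made: ticket enters the board
    started: datetime    # work on the task actually begins
    released: datetime   # task is released

    @property
    def lead_time_days(self) -> float:
        """Time between the request being made and the task being released."""
        return (self.released - self.requested).total_seconds() / SECONDS_PER_DAY

    @property
    def cycle_time_days(self) -> float:
        """Actual work-in-progress time: from start of work until release."""
        return (self.released - self.started).total_seconds() / SECONDS_PER_DAY

ticket = Ticket(datetime(2024, 3, 1), datetime(2024, 3, 5), datetime(2024, 3, 8))
print(ticket.lead_time_days, ticket.cycle_time_days)  # 7.0 3.0
```

The four days the ticket waited before work started count toward lead time but not toward cycle time.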

7. Automated acceptance tests are run with each check-in. True/False?

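False. Automated unit tests are run with each check-in; automated acceptance tests take longer to run, so they are run regularly (at least daily) as part of the continuous integration full system build.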

8. In addition to test automation, other testing tasks may also be automated. Mention three.

- Test data generation

- Loading test data into systems

- Deployment of builds into the test environments

- Restoration of test environment to a baseline

- Comparison of data outputs

9. Differentiate between defect density and intensity.

- Defect intensity: how many defects are found per day or per transaction

- Defect density: number of defects found compared to the number of user stories, effort, and/or quality attributes
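A sketch of both measures under assumed figures (24 defects found over 8 days of testing, across 12 user stories). The notes also allow effort or quality attributes as the density denominator; user stories are used here only as an example.

```python
def defect_intensity(defects_found: int, testing_days: float) -> float:
    """Defects found per day of testing (a per-transaction rate works the same way)."""
    return defects_found / testing_days

def defect_density(defects_found: int, user_stories: int) -> float:
    """Defects found relative to the number of user stories."""
    return defects_found / user_stories

print(defect_intensity(24, 8))   # 3.0 defects per day
print(defect_density(24, 12))    # 2.0 defects per user story
```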

10. List four items included in wikis

- Product feature diagrams, feature discussions

- Tools and techniques

- Metrics, charts, and dashboards

- Conversations

11. Identify the corresponding test level for each "Done" criterion

- All user personas covered.

- All interfaces between units tested.

- 100% decision coverage, if possible, with careful review of infeasible paths.

- All constituent user stories, with acceptance criteria, are approved by customer.

- Testing done in a production-like environment(s).

- All features for the iteration are ready and individually tested according to the feature level criteria.

- System testing.

- Integration testing.

- Unit testing.

- Feature testing.

- System testing.

- Iteration testing.

12. Identify Test Tool

- Tools used to replace manual data loading.

- Specific tools available to support test first approaches, such as behavior-driven development, test-driven development, and acceptance test-driven development.

- Tools used to populate an application’s database are very beneficial when a lot of data and combinations of data are necessary to test the application.

- Tools capture and log activities performed on an application during an exploratory test session.

- Test data load tools.

- Automated test execution tools.

- Test data preparation and generation tools.

- Exploratory test tools.

Exams

Test Bank

1. Provided in Lectures

- Lectures 3 & 4 & 5

- Lectures 6 & 7 & 8

2. NOT Provided in Lectures

Lecture 1

1. software quality

ongoing process that ensures the software product meets and complies with the organization's established and standardized quality specifications

makes sure that the product has met its specification

- software functional quality

how effectively a software product adheres to the core design based on functional standards

- software structural quality

how effectively the product satisfies non-functional standards

PDCA (Deming cycle)

well-defined cycle for quality assurance

- plan

- do

- check

- act

1.1 benefits of SQA ?

- sqa is a cost-effective investment

- increases customers trust

- improves the product's safety and reliability

- lowers the expense of maintenance

- guards against system failure

2. quality assurance vs quality control

| aspect | QC (repair defects) | QA (early detection) |
|---|---|---|
| focus | product quality | project process (process oriented) |
| character | reactive (detect issues) | preventive (block issues) |
| starting point | requirement gathering | project planning |
| tools and measures | testing, test metrics and reports | quality metrics, reviews and audits |

3. capability maturity model integration (CMMI)

is a process-level improvement training and appraisal program

a set of global best practices that drives business performance through building and benchmarking key capabilities.

it defines the following maturity levels for processes

- initial

- managed

- defined

- quantitatively managed

- optimizing

3.1 CMMI addresses three areas of interest

- product and service development

- service establishment

- product and service acquisition

4. process quality assurance (PQA)

involves:

- objectively evaluating performed processes, work products against the applicable process descriptions, standards, and procedures

- identifying and documenting noncompliance issues

- providing feedback to project staff and managers on the results of quality assurance activities

objectivity in evaluation is achieved through

- an independent QA organization

- independent reviewers

- standard criteria

- checklists

objective evaluation methods

- formal audits

- peer reviews

- in-depth review of work in place where it's performed

- distributed reviews

- built-in or automated process checks

4.1 poka-yoke mechanism

quality assurance process to develop processes that reduce defects by avoiding or correcting mistakes in early design and development phases

categories of poka-yoke

- defect prevention

- defect detection

examples of work products

- Criteria

- Checklists

- Evaluation reports

- Noncompliance reports

- Improvement proposals

noncompliance issues are problems identified when team members don't follow applicable standards, recorded processes, or procedures

5. quality assurance

includes

- evaluating the process

- identifying ways that the process can be improved

- submitting improvement proposals

5.1 QA in scrum

Scrum has many opportunities for objective evaluation:

- user stories are examined

- scrum master coaches the team

- feedback on what was built

- management or peers observe Scrum ceremonies

5.2 PQA in agile

- release planning

- backlog grooming

- sprint planning

- sprint execution

- sprint review

- retrospective

6. process assets development

process assets are tangible resources used by an organization to guide the management of its projects and operations

examples

- templates

- plans

- best practices

- approved methods

- guidelines

6.1 process assets in agile

developed in sprint 0, and used to collect refinement suggestions

7. process architecture

defines the structures that contain the processes

aspects:

- structural architecture

  - the physical structure or framework for organizing the content

- content architecture

  - reflects how the data is organized within the structural architecture

8. steps to update process assets

- verify the organization's set of standard processes

- review and decide if the recommendations will be incorporated

8.1 process adaptation/tailoring

is a critical activity that allows controlled changes to the processes due to the specific needs of a project or a part of the organization

reasons for tailoring:

- Accommodating the process to a new solution

- Adapting the process to a new work environment

- Modifying the process description, so that it can be used within a given project

- Adding more detail to the process to address a unique solution or constraint

- Modifying, replacing, or reordering process elements

9. work environment standards

allow the organization and projects to benefit from common tools, training, maintenance, and cost savings

10. QA relation to validation & verification

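verification

confirms that the work product satisfies its specified requirements ("are we building the product right?")

validation

confirms that the product works as intended in its intended environment ("are we building the right product?")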

Lecture 2

1. CMMI causal analysis and resolution

identify causes of selected outcomes and take action to improve process performance

example: an agile team that is consistently unable to complete the work defined for each sprint would look for causes such as

- chronic distractions

- using unreliable velocity data

- poorly defined user stories

- exceeding team capacity

- misunderstanding complexity

1.2 when to apply causal analysis?

- during the task, when problems or successes warrant a causal analysis

- when a work product significantly deviates from what is expected

- when more defects than expected are found

- when process performance exceeds expectations

- when a process does not meet its quality objectives

1.3 factors affecting the effort required for causal analysis

- stakeholders

- risks

- complexity

- frequency

- availability of data

2. root cause analysis (RCA)

a CMMI mechanism for analyzing defects to identify their cause; it helps prevent defects in later releases

2.1 types of root causes

- human cause (e.g., under-skilled staff)

- organizational cause (e.g., vague instructions given by the team lead, no monitoring tools in place to assess quality)

- physical cause (e.g., a computer that keeps restarting)

2.2 RCA facilitator

- ensure a no-blame approach

- ensure the team focuses on solutions, not problems

- provide training

- ensure information is captured in the RCA document

2.3 how to perform RCA

- define the problem using SMART rules

- collect data

- identify possible causal factors

- identify the root cause

- recommend and implement solutions

2.4 RCA tools

- brainstorming

- 5 whys

- fishbone diagram

- scatter plots

- pareto chart

- FMEA

2.4.1 brainstorming

is a group process in which each participant contributes one idea in turn, round after round, to collect different viewpoints

challenges

- the wrong root cause may be selected, since there is no evidence and no structured way to analyze it

- it does not get beyond the collective knowledge of the team

2.4.2 5 whys

- it inspects a problem in depth by repeatedly asking "why?" until the real cause emerges

- avoid answers that are too simple or that overlook important details

- ask "why?" five times in succession, each answer becoming the subject of the next "why?"
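The chain of whys can be pictured as following cause links until no deeper cause is recorded. A minimal sketch, assuming the team's answers are stored as a hypothetical `effect -> cause` mapping; the example answers are invented for illustration.

```python
def five_whys(problem: str, answers: dict[str, str], max_depth: int = 5) -> list[str]:
    """Follow 'why?' answers from the problem towards a root cause.

    Stops after max_depth whys or when no deeper answer is recorded.
    The last element of the returned chain is the candidate root cause.
    """
    chain = [problem]
    # Each iteration asks one more "why?" about the latest answer.
    while len(chain) <= max_depth and chain[-1] in answers:
        chain.append(answers[chain[-1]])
    return chain


# Hypothetical answers gathered in an RCA session.
answers = {
    "release shipped with a crash": "the crash path was not tested",
    "the crash path was not tested": "no test case covered empty input",
    "no test case covered empty input": "test design skipped boundary values",
    "test design skipped boundary values": "testers were never trained in boundary analysis",
}
chain = five_whys("release shipped with a crash", answers)
```

Five is a rule of thumb rather than a hard limit; here four whys already reach a cause the team can act on, and `max_depth` only guards against endless or circular answers.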

2.4.3 fishbone diagram

also known as the cause-and-effect (Ishikawa) diagram

shows the problem at the head of a fish-shaped diagram, with the major cause categories branching off the backbone

steps:

- write the problem at the head of the fish

- identify the categories of causes and write one at the end of each bone

- identify the primary causes under each category

- extend the causes to secondary, tertiary, ... levels
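Structurally, the diagram is a tree: problem at the head, categories as bones, causes nested beneath them. A minimal sketch using a nested dictionary that prints the diagram as an indented outline; the categories and causes are hypothetical.

```python
def render(problem: str, bones: dict[str, dict[str, list[str]]]) -> str:
    """Render a fishbone diagram as an indented text outline.

    bones maps category -> primary cause -> list of secondary causes.
    """
    lines = [f"problem: {problem}"]
    for category, causes in bones.items():
        lines.append(f"  {category}")
        for primary, secondary in causes.items():
            lines.append(f"    - {primary}")
            lines.extend(f"      - {s}" for s in secondary)
    return "\n".join(lines)


bones = {
    "people": {"unclear instructions": ["no written guidelines"]},
    "tools": {"build server unstable": ["disk nearly full", "outdated OS"]},
}
print(render("nightly build fails", bones))
```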

2.4.4 scatter plots

a visual representation of the relationship between two sets of data, used to test for correlation between the variables
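A scatter plot is often read alongside a correlation coefficient. A minimal sketch computing Pearson's r, assuming paired numeric samples; the data (module size vs. defects found) is invented for illustration. On Python 3.10+ `statistics.correlation` gives the same coefficient.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (sx * sy)


# Hypothetical data: module size (KLOC) vs. defects found in that module.
kloc = [1.2, 2.5, 3.1, 4.8, 6.0]
defects = [3, 5, 8, 11, 14]
r = pearson_r(kloc, defects)  # close to +1: strong positive correlation
```

A value near +1 or -1 suggests a strong linear relationship worth investigating; correlation alone does not prove that one variable causes the other.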

2.4.5 pareto chart

a bar graph that sorts causes of failure by frequency, in descending order, to show their relative significance

80% of effects come from 20% of causes (Pareto principle)
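A minimal sketch of the analysis behind a Pareto chart: sort causes by frequency, accumulate percentages, and keep the "vital few" causes that together reach a cutoff (80% here, following the Pareto principle). The defect counts are hypothetical.

```python
def vital_few(counts: dict[str, int], cutoff: float = 0.80) -> list[tuple[str, int, float]]:
    """Return (cause, count, cumulative share) rows, largest cause first,
    up to and including the first cause where the cumulative share reaches cutoff."""
    total = sum(counts.values())
    rows = []
    running = 0
    for cause, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        running += n
        share = running / total
        rows.append((cause, n, round(share, 3)))
        if share >= cutoff:
            break
    return rows


# Hypothetical defect log grouped by cause.
defects = {"unclear requirements": 42, "missing tests": 28, "config errors": 12,
           "typos in UI": 10, "third-party bugs": 8}
for cause, n, cum in vital_few(defects):
    print(f"{cause:22} {n:3} {cum:.0%}")
```

Here three of five causes account for 82% of the defects, so they are the first candidates for corrective action.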

2.4.6 FMEA (failure mode and effects analysis)

a method used during product design to identify potential problems and solve them

- failure modes

  - identifies all the ways that a problem could occur

- effects analysis

  - evaluates the causes and consequences of each failure mode

a Severity, Occurrence, and Detection (SOD) rating is used to calculate risk and determine the next steps
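The SOD ratings are commonly combined into a risk priority number, RPN = Severity × Occurrence × Detection, with each factor scored from 1 to 10 (a widespread FMEA convention, assumed here rather than taken from the lecture). A minimal sketch that ranks hypothetical failure modes by RPN:

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (almost certainly detected) to 10 (undetectable)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1 to 10 scale.
        for field_name in ("severity", "occurrence", "detection"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be in 1..10, got {value}")

    @property
    def rpn(self) -> int:
        # Risk priority number: a higher value means address it sooner.
        return self.severity * self.occurrence * self.detection


modes = [
    FailureMode("payment timeout not retried", 8, 4, 6),
    FailureMode("log file fills disk", 5, 6, 3),
    FailureMode("wrong currency rounding", 9, 2, 8),
]
for m in sorted(modes, key=lambda m: m.rpn, reverse=True):
    print(m.name, m.rpn)
```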

3. addressing causes

- changing the process to remove error-prone steps

- updating a process based on previous successes

- eliminating non-value-added tasks

- automating all or part of the process

- adding process steps

3.1 action proposals

3.2 action plans

implement the selected action proposals; an action plan includes:

- people responsible

- description of the action

- description of necessary tasks

- description of affected areas

- affected stakeholders

- cost

3.3 process improvement proposals

submitted for changes proven to be effective; a proposal covers:

- areas that were analyzed, including their context

- solution selection

- actions taken

- results achieved

4. international standard

4.1 internal audit

- the organization shall conduct internal audits to provide information on whether the quality management system

  - conforms to

    - the organization's own requirements

    - the international standard's requirements

  - is effectively implemented and maintained

4.1.1 internal audit steps

- planning the audit schedule

- planning the process audit

- conducting the audit

- reporting the audit

- following up on issues or improvements found

4.2 management reviews

4.2.1 management review inputs

- status of actions

- changes in external and internal issues

- information on the performance

- the effectiveness of actions

4.2.2 management review outputs

- opportunities for improvement

- any need for changes

- resource needs

4.2.3 improvements

- improving products and services

- correcting and preventing undesired effects

- improving performance and effectiveness

4.2.4 nonconformity and corrective action

- react to the nonconformity and, as applicable, take action to control and correct it and deal with the consequences

- evaluate the need for action to eliminate the cause(s) of nonconformity

- implement any action needed

- review the effectiveness of any corrective action taken

- update risks and opportunities determined during planning

- make changes to the quality management system, if necessary.

4.2.5 continuous improvement

by PDCA (plan-do-check-act)

5. PDCA

a cycle that is a useful tool to help teams solve problems efficiently

advantages

- stimulates continuous improvement

- tests possible solutions

- prevents recurring mistakes

6. RACI model/matrix

shows how each person contributes to a project

- responsible

  - ensures the right things happen at the right time

- accountable

  - ensures that something gets done correctly

- consulted

  - people who need to be consulted

- informed

  - people who need to be kept informed
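A RACI matrix is often sanity-checked with simple rules; a widely used one (assumed here, not stated in the lecture) is that each task has exactly one Accountable person and at least one Responsible person. A minimal sketch with a hypothetical matrix, simplified so that each person holds one letter per task:

```python
def check_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Validate a RACI matrix mapping task -> {person: role letter}.

    Flags tasks without exactly one 'A' or without any 'R'.
    """
    problems = []
    for task, roles in matrix.items():
        letters = list(roles.values())
        if letters.count("A") != 1:
            problems.append(f"{task}: needs exactly one Accountable, found {letters.count('A')}")
        if "R" not in letters:
            problems.append(f"{task}: has no Responsible")
    return problems


matrix = {
    "write test plan": {"sara": "R", "omar": "A", "lina": "C", "team": "I"},
    "approve release": {"omar": "A", "sara": "A", "team": "I"},
}
print(check_raci(matrix))
```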

7. cost of quality (CoQ)

a method for calculating the costs an organization incurs to achieve good quality and to deal with poor quality

cost of good quality:

- prevention cost

  - costs of preventing poor quality in products

- appraisal cost

  - costs of measuring, inspecting, and evaluating products to assure conformance to quality requirements

cost of poor quality:

- internal failure cost

  - incurred when a product fails to conform to quality specifications before shipment to the customer

- external failure cost

  - incurred when a product fails to conform to quality specifications after shipment to the customer
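The four categories add up to the total cost of quality. A minimal sketch with invented figures that splits the total into the cost of good quality (prevention + appraisal) and the cost of poor quality (internal + external failure):

```python
from dataclasses import dataclass


@dataclass
class QualityCosts:
    prevention: float
    appraisal: float
    internal_failure: float
    external_failure: float

    @property
    def good_quality(self) -> float:
        return self.prevention + self.appraisal

    @property
    def poor_quality(self) -> float:  # COPQ
        return self.internal_failure + self.external_failure

    @property
    def total(self) -> float:
        return self.good_quality + self.poor_quality


# Hypothetical figures for one release, in thousands of dollars.
costs = QualityCosts(prevention=20, appraisal=30, internal_failure=25, external_failure=45)
print(costs.total, costs.poor_quality / costs.total)
```

In this made-up case more than half of the total is spent on failures, which would argue for shifting budget towards prevention and appraisal.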

7.1 goal & benefits of CoQ

cost of poor quality (COPQ)

costs that are generated as a result of producing defective material

7.2 CoQ benefits

- higher profitability

- more consistent products

- greater customer satisfaction

- lower costs

- meeting industry standards

- increased staff motivation

- reducing waste

7.3 CoQ limitations

- measuring quality costs does not solve quality problems

- CoQ is merely a scoreboard for current performance

- inability to quantify the hidden quality costs (hidden factory)

hidden factory: represents the percentage of an organization's total capacity or effort that is used to overcome the cost of poor quality

Lecture 3

1. software systems context

- defects occur due to:

  - human beings are imperfect

  - time pressure

  - complex code

  - infrastructure complexity

- defects can lead to:

  - loss of money

  - loss of time

  - loss of business reputation

  - injury or death

Error -> Defect -> Failure

error: