Exercise 5

1. Replace each description with its key term

- Black-box testing technique that is very useful for designing acceptance tests with customer/user participation.

- The time gap between date of detection & date of closure

- Black-box testing technique useful for testing the implementation of system requirements that specify how different combinations of conditions result in different outcomes

- Testing technique where informal (not pre-defined) tests are designed, executed, logged, and evaluated dynamically during test execution. The test results are used to create tests for the areas that may need more testing

- Type of testing used to focus the effort required during testing. It is used to decide where and when to start testing and to identify areas that need more attention

- Tool used to establish and maintain integrity of work products (components, data and documentation) of the system through the whole life cycle

- Black-box testing technique used only when data is numeric or sequential

- divides data into partitions in such a way that all the members of a given partition are expected to be processed in the same way.

- is an extension of equivalence partitioning (EP) that can ONLY be used when the partition is ordered, consisting of numeric or sequential data.

- allows the tester to view the software in terms of its states, transitions between states, the inputs or events that trigger state changes (transitions) and the resulting actions.

- exercises the executable statements in the code.

-

- is measured as the number of executable statements executed by the tests divided by the total number of executable statements in the test object, normally expressed as a percentage.

- is measured as the number of decision outcomes executed by the tests divided by the total number of decision outcomes in the test object, normally expressed as a percentage.

- exercises the decisions in the code and tests the code that is executed based on all decision outcomes.

- finds the minimum number of paths that together cover all the edges.

- is where tests are derived from the tester’s skill and insight and their experience with similar applications and technologies.

- enumerates a list of possible defects and designs tests that attack these defects.

- is sometimes conducted using session-based testing to structure the activity within a defined time-box, based on a test charter containing test objectives.

- involves situations that, should they occur, may have a negative effect on a project's ability to achieve its objectives.

- involves the possibility that a work product may fail to satisfy the legitimate needs of its users/stakeholders.

- The time gap between date of detection & date of closure.

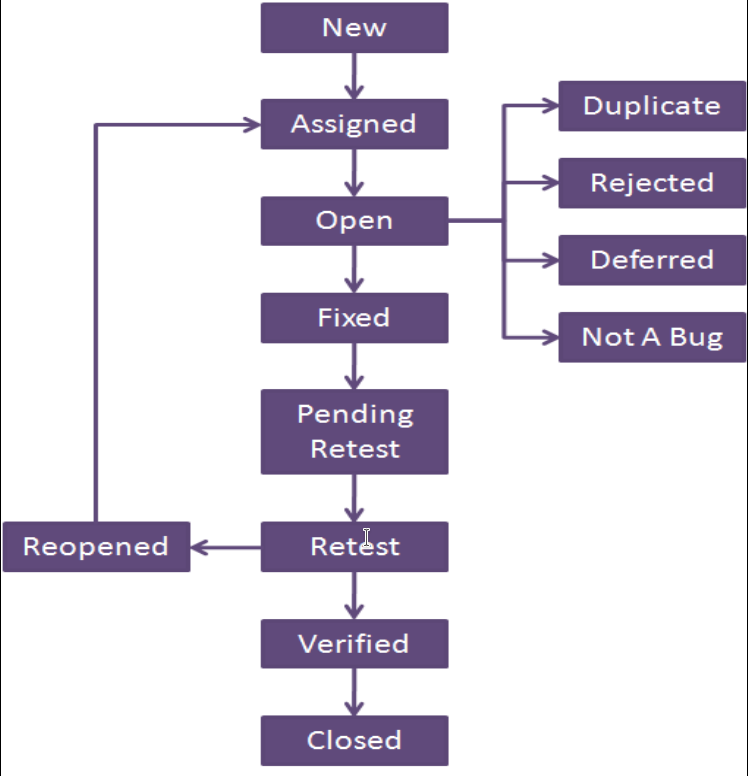

- The life cycle that a bug goes through, from its discovery to its fix and closure.

- finds the minimum number of paths that together cover all the nodes.

Key terms: use case testing, defect age, decision table testing, exploratory testing, risk-based testing, configuration management, boundary value analysis, equivalence partitioning, boundary value analysis (BVA), state transition testing, statement testing, coverage, decision testing, branch coverage, experience-based testing, error guessing technique, exploratory testing, project risk, product risk, defect age, bug life cycle, statement coverage
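As a small illustration of the defect age definition above (the time gap between date of detection and date of closure), here is a minimal sketch. The function name `defect_age`, the choice of calendar days as the unit, and the sample dates are assumptions made for this example only.

```python
from datetime import date


def defect_age(detected: date, closed: date) -> int:
    """Return the defect age in calendar days (closure date minus detection date)."""
    if closed < detected:
        raise ValueError("closure date cannot precede detection date")
    return (closed - detected).days


# Hypothetical dates, for illustration only.
age = defect_age(date(2024, 3, 1), date(2024, 3, 15))
print(age)  # 14
```

Some teams measure defect age in working days or in project phases instead; the calculation changes, but the idea of a gap between two points in the defect's life stays the same.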

2. Identify the type of project risk

- Test environment not ready on time

- Skill, training and staff shortages

- contractual issues

- Improper attitude such as not appreciating the value of finding defects during testing

- Low quality of the design, code, or configuration data

technical issues, organizational factors, supplier issues, organizational factors, technical issues

3. Identify the approach used by the testing tool

- A generic script processes action words (keywords) describing the actions to be taken, and then calls scripts to process the associated test data

- Separates out the test inputs and expected results, usually into a spreadsheet, and uses a more generic test script that can read the input data and execute the same test script with different data.

- Enables a functional specification to be captured in the form of a model, such as an activity diagram. This task is generally performed by a system designer.

keyword-driven testing approach, data-driven testing approach, model-based testing
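The first two approaches can be sketched in a few lines. This is only an illustration under stated assumptions: the function under test (`add`), the data rows, and the keyword names (`enter`, `add`, `check`) are all hypothetical, and a real tool would read the data from a spreadsheet or similar file rather than from an in-code list.

```python
def add(a, b):
    """Hypothetical function under test."""
    return a + b


# Data-driven: inputs and expected results are kept apart from the script.
# This list stands in for rows of a spreadsheet: (input a, input b, expected).
DATA_ROWS = [
    (1, 2, 3),
    (0, 0, 0),
    (-1, 1, 0),
]


def run_data_driven(rows):
    """Execute the same generic check once per data row; return pass/fail flags."""
    return [add(a, b) == expected for a, b, expected in rows]


# Keyword-driven: each action word maps to a small script that does the work.
def kw_enter(state, value):
    state["stack"].append(value)


def kw_add(state):
    b = state["stack"].pop()
    a = state["stack"].pop()
    state["stack"].append(add(a, b))


def kw_check(state, expected):
    state["results"].append(state["stack"][-1] == expected)


KEYWORDS = {"enter": kw_enter, "add": kw_add, "check": kw_check}


def run_keyword_driven(steps):
    """Generic script: look up each action word and call its keyword script."""
    state = {"stack": [], "results": []}
    for keyword, *args in steps:
        KEYWORDS[keyword](state, *args)
    return state["results"]


STEPS = [("enter", 2), ("enter", 3), ("add",), ("check", 5)]

print(run_data_driven(DATA_ROWS))
print(run_keyword_driven(STEPS))
```

In both cases new tests are added by editing data (rows or steps), not by writing new script code.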

4. How to measure the decision testing coverage?

Coverage is measured as the number of decision outcomes executed by the tests divided by the total number of decision outcomes in the test object, normally expressed as a percentage.
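The formula can be worked through with a small sketch. The test object here is hypothetical: two decisions (`d1`, `d2`), each with a true and a false outcome, of which the tests exercised three.

```python
def decision_coverage(executed_outcomes: set, all_outcomes: set) -> float:
    """Decision coverage (%) = executed decision outcomes / total decision outcomes."""
    if not all_outcomes:
        raise ValueError("the test object has no decision outcomes")
    return 100.0 * len(executed_outcomes & all_outcomes) / len(all_outcomes)


# Hypothetical test object: two decisions, each with a True and a False outcome.
ALL_OUTCOMES = {("d1", True), ("d1", False), ("d2", True), ("d2", False)}

# Outcomes exercised by the (hypothetical) test suite: d2 was never False.
EXECUTED = {("d1", True), ("d1", False), ("d2", True)}

print(decision_coverage(EXECUTED, ALL_OUTCOMES))  # 75.0
```

Adding one test that drives `d2` to its false outcome would bring the result to 100%.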

5. When is exploratory testing most appropriate?

Most useful where:

- there are few or inadequate specifications

- there is severe time pressure on testing

6. What is the common minimum coverage standard for decision table testing?

At least one test case per decision rule in the table.
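A minimal sketch of that standard follows, assuming a hypothetical discount rule with two conditions (membership and order size). The function `discount`, the rule values, and the table layout are invented for illustration; the point is that each rule (column of the decision table) gets one test case.

```python
def discount(is_member: bool, large_order: bool) -> int:
    """Hypothetical function under test: discount percentage for an order."""
    if is_member and large_order:
        return 15
    if is_member:
        return 10
    if large_order:
        return 5
    return 0


# One entry per decision rule: (is_member, large_order, expected discount).
DECISION_TABLE = [
    (True, True, 15),
    (True, False, 10),
    (False, True, 5),
    (False, False, 0),
]


def run_rules(table):
    """Run one test case per rule and return a pass/fail flag for each."""
    return [discount(member, large) == expected for member, large, expected in table]


print(run_rules(DECISION_TABLE))
```

With two Boolean conditions there are four rules, so four test cases meet the minimum coverage standard.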

7. To achieve 100% coverage in equivalence partitioning, test cases must cover all identified partitions by using (one value) . Complete.
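As a sketch of how that coverage could be counted, assume a hypothetical age field that accepts 18 to 65 inclusive. The partition names, the limits, and the helper functions are assumptions for this example.

```python
def partition_of(age: int) -> str:
    """Map a test value to its equivalence partition (hypothetical age field, valid 18..65)."""
    if age < 18:
        return "too_young"
    if age <= 65:
        return "valid"
    return "too_old"


ALL_PARTITIONS = {"too_young", "valid", "too_old"}


def ep_coverage(test_values) -> float:
    """Coverage (%) = partitions exercised by at least one value / all identified partitions."""
    covered = {partition_of(value) for value in test_values}
    return 100.0 * len(covered) / len(ALL_PARTITIONS)


print(ep_coverage([30, 40, 50]))  # three values, but only one partition covered
print(ep_coverage([10, 30, 70]))  # one value from each partition
```

Extra values from a partition that is already covered do not raise coverage, which is why one value per partition is enough.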

8. Statement and decision testing are most commonly used at the (component) test level. Complete.

9. Risk level of an event can be determined based on ( Likelihood/probability of an event happening) and (Impact/harm resulting from event) . Complete.
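One common way to combine the two factors is to score each on a small scale and multiply. That scoring scheme is an assumption here, not something the exercise prescribes, and the risk items below are hypothetical.

```python
# Assumed three-point scale for both likelihood and impact.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_level(likelihood: str, impact: str) -> int:
    """Risk level as likelihood score times impact score (range 1..9)."""
    return LEVELS[likelihood] * LEVELS[impact]


# Hypothetical product risks: (description, likelihood, impact).
risks = [
    ("typo on help page", "high", "low"),
    ("payment total miscalculated", "medium", "high"),
    ("data loss on crash", "low", "high"),
]

# Highest risk level first, so testing effort can be focused there.
prioritized = sorted(risks, key=lambda r: risk_level(r[1], r[2]), reverse=True)

for description, likelihood, impact in prioritized:
    print(risk_level(likelihood, impact), description)
```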

10. Who is responsible for testing at the following test levels: operational acceptance, unit, and system testing?

| Level | Responsibility (done by) |
|---|---|
| Component integration | Developers |
| System integration | Independent test team |
| Operational acceptance | Operations/systems administration staff |
| User acceptance | Business analysts, subject matter experts, and users |

11. Contrast benefits and drawbacks of hiring independent testers.

- Benefits

  - testers are unbiased and see other and different defects

  - testers can verify assumptions made by people during specification and implementation of the system

- Drawbacks

  - isolation from the development team

  - developers may lose their sense of responsibility for quality

  - testers may be seen as a bottleneck for release

12. Resulting product risk information is used to guide test activities. Elaborate.

- determine the test techniques to be employed.

- determine the extent of testing to be carried out.

- prioritize testing to find the critical defects as early as possible.

- determine whether any non-testing activities could be employed to reduce risk (e.g., providing training to inexperienced designers).

13. Illustrate the bug life cycle using a diagram.

14. Identify three potential risks of using tools to support testing.

- The time and cost for the initial introduction of the tool may be underestimated

- The vendor may provide a poor response for support, defect fixes, and upgrades

- The tool may be relied on too much

- A new technology may not be supported by the tool

- Expectations of the tool may be unrealistic

- Version control of test assets may be neglected

15. After completing the tool selection and a successful proof-of-concept evaluation, introducing the selected tool into an organization generally starts with a pilot project. Why?

- gain knowledge about the tool

- evaluate how the tool fits with existing processes and practices

- decide on standard ways of using the tool

- Understand the metrics that you wish the tool to collect and report

16. Factors in choosing a test technique

- type of system.

- type of risk.

- Level of risk.

- Customer or contractual requirements.

- test objective.

- Time and budget.

- knowledge of testers.

17. Identify the testing role

- Component integration testing level: developers.

- System integration testing level: independent test team.

- Operational acceptance test level: operations/systems administration staff.

- User acceptance test level: business analysts, subject matter experts, and users.

18. Potential benefits of using tools to support testing

- Reduction in repetitive manual work.

- Greater consistency and repeatability.

- More objective assessment.

- Easier access to information about testing.

19. Tool support for specialized testing

- Usability testing.

- Security testing.

- Accessibility testing.

- Localization testing.

- portability testing.

20. Test execution tool approaches

- Data-driven testing approach.

- Keyword-driven testing approach.

- Model-based testing tools (MBT).

21. Mention black-box test case design techniques

- Equivalence Partitioning (EP)

- Boundary Value Analysis (BVA)

- Decision Tables

- State Transition Testing

- Use Case Testing
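For boundary value analysis in the list above, the test values can be derived mechanically once an ordered partition is known. The sketch below assumes the two-value variant of BVA (each boundary plus its nearest neighbour outside the partition) and a hypothetical integer range of 18 to 65; the function name is illustrative.

```python
def boundary_values(low: int, high: int) -> list:
    """Two-value BVA for an ordered integer partition [low, high].

    Returns each boundary and the closest value just outside it.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    return [low - 1, low, high, high + 1]


# Hypothetical valid range 18..65.
print(boundary_values(18, 65))  # [17, 18, 65, 66]
```

A three-value variant would also add the neighbour just inside each boundary (19 and 64 in this example).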

22. Mention white-box (structure-based) test case design techniques

- Statement testing

- Decision testing

23. Mention experience-based test case design techniques

- Error guessing

- Exploratory testing

24. Mention the tester's role

- prepare test data

- review test plan

- design test cases

- evaluate non-functional characteristics

- design test environment

- execute tests

25. Success factors for tools

- introducing the tool incrementally to the organization

- adapting and improving processes to fit the use of the tool

- provide training for the tool users

- Monitoring tool use

- provide support to the users